Cycle Stealing in the Operating System

Defining terms and ideas-

Cycle stealing is a technique, supported by the hardware and managed by the operating system, that lets an I/O device access memory without interrupting the CPU. Instead of taking over the memory bus for an entire block of data, the I/O device (usually through a DMA controller) takes one bus cycle at a time, ideally a cycle in which the central processing unit (CPU) is not using the bus, and uses that cycle to transfer data to or from memory.

Because the CPU is delayed for at most one bus cycle at a time, the effect on performance is usually small.
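The idle-cycle behavior described above can be illustrated with a toy Python model. It assumes a single shared bus, a per-cycle record of whether the CPU needs the bus, and a device that moves one word in each idle cycle; the function name and the bus pattern are hypothetical. Real controllers may also take a cycle the CPU wants, stalling it for that one cycle.

```python
def steal_idle_cycles(cpu_uses_bus, words_to_transfer):
    """Return the bus cycles in which the device moves a word.

    cpu_uses_bus has one boolean per bus cycle (True = the CPU needs the bus).
    The device transfers one word in each cycle the CPU leaves idle and
    stops once the requested number of words has been moved. If there are
    not enough idle cycles, the returned list is shorter than requested.
    """
    stolen = []
    for cycle, cpu_busy in enumerate(cpu_uses_bus):
        if len(stolen) == words_to_transfer:
            break  # transfer complete
        if not cpu_busy:
            stolen.append(cycle)  # idle cycle: the device moves one word
    return stolen


bus_pattern = [True, False, True, True, False, False, True, False]
print(steal_idle_cycles(bus_pattern, 3))  # [1, 4, 5]
```

The CPU keeps every cycle it asked for; the device simply fills the gaps, which is why the transfer can take longer than the number of words alone would suggest.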

Purpose and benefits-

Cycle stealing serves two key objectives:

To improve I/O efficiency. Because I/O devices can access memory directly, cycle stealing can greatly reduce the time the CPU spends waiting for I/O operations to finish.

To free the CPU for other tasks. While a transfer is in progress, the CPU can run other processes instead of handling the I/O itself. This can increase the system's responsiveness and overall efficiency.

Drawbacks-

Cycle stealing has several drawbacks, including:

Data corruption can become more likely. If the CPU and the I/O device read and write the same memory area without proper synchronization, for example while the CPU is still working on a buffer that a transfer is overwriting, the data can be corrupted.

The system's overall performance can suffer. If the I/O device steals too many bus cycles, the CPU has to wait for the bus more often and slows down.

Operating System Management of Processors-

An operating system's (OS) ability to manage processors is crucial. It involves managing resources like memory and I/O and scheduling applications to run on the CPU.

The OS must ensure that all processes may run while preventing any particular process from using the CPU exclusively.

The OS must constantly balance the demands of all active processes, which makes this a challenging task.

An OS can choose from a variety of CPU scheduling algorithms, which differ in complexity and in how they prioritize processes.

The following are a few of the most popular CPU scheduling algorithms:

- First-come, first-served (FCFS): This is the simplest CPU scheduling algorithm. Processes are scheduled in the order in which they arrive.

- Shortest-job-first (SJF): The process with the shortest expected execution time is scheduled first.

- Round-robin (RR): CPU time is divided into time slices, and each process in turn is given one time slice.

- Priority scheduling: Each process is assigned a priority, and higher-priority processes are scheduled before lower-priority ones.
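A small worked example makes the difference between FCFS and SJF concrete. This sketch assumes that all processes arrive at time 0 and are not preempted once started; the burst lengths are made-up values chosen for illustration.

```python
def average_waiting_time(burst_times):
    """Average waiting time when processes run in the given order.

    Assumes every process arrives at time 0 and runs to completion once
    started (non-preemptive scheduling).
    """
    waiting, elapsed = 0, 0
    for burst in burst_times:
        waiting += elapsed  # this process waited for everything before it
        elapsed += burst
    return waiting / len(burst_times)


def fcfs(burst_times):
    return average_waiting_time(burst_times)  # run in arrival order


def sjf(burst_times):
    return average_waiting_time(sorted(burst_times))  # shortest job first


bursts = [24, 3, 3]  # hypothetical CPU burst lengths, in ms
print(fcfs(bursts))  # 17.0
print(sjf(bursts))   # 3.0
```

With FCFS the two short jobs wait behind the long one, so the average wait is much higher than with SJF.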

The OS must also manage the resources that processes use, such as RAM, I/O devices, and file systems.

Every process must be able to access the resources it needs, and the OS must make sure that no single process monopolizes those resources.

CPU Scheduling Algorithms-

Operating systems use CPU scheduling algorithms to decide which process will run on the CPU next. Many different algorithms exist, each with its own benefits and drawbacks.

The following are a few of the most popular CPU scheduling algorithms:

- FCFS: Processes run in the order in which they arrive in the ready queue. Although it is the simplest CPU scheduling strategy, it is often not the most efficient, because short processes can get stuck waiting behind a long one.

- SJF: The process with the shortest estimated execution time is scheduled first. This usually gives a much lower average waiting time than FCFS, but it is hard to implement in practice because execution times must be known or estimated in advance, and long processes can starve.

- Round-robin (RR): CPU time is divided into time slices, and each process runs for one slice before moving to the back of the queue. The extra context switches make it less efficient than FCFS, but it is fairer and gives interactive processes better response times.

- Priority scheduling: Processes are scheduled according to their priority, so higher-priority processes run before lower-priority ones. This lets important work run first, but it is more complex than FCFS and SJF, and low-priority processes can starve unless the OS gradually raises their priority (aging).
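Round-robin is easiest to see in a short simulation. The sketch below assumes that all processes arrive at time 0 in index order and that a context switch costs nothing, which real systems cannot assume; the burst lengths and the 4 ms quantum are hypothetical.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the completion time of each process under round-robin.

    Assumes all processes arrive at time 0, are queued in index order,
    and that context switches take no time.
    """
    remaining = list(burst_times)
    ready = deque(range(len(burst_times)))
    completion = [0] * len(burst_times)
    clock = 0
    while ready:
        pid = ready.popleft()
        run = min(quantum, remaining[pid])  # run for at most one time slice
        clock += run
        remaining[pid] -= run
        if remaining[pid] > 0:
            ready.append(pid)  # unfinished: go to the back of the queue
        else:
            completion[pid] = clock
    return completion


print(round_robin([24, 3, 3], quantum=4))  # [30, 7, 10]
```

Subtracting each burst from its completion time gives waiting times of 6, 4, and 7 ms. A larger quantum makes the schedule behave more like FCFS; a smaller one improves response time at the cost of more context switches.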

The choice of CPU scheduling algorithm depends on the requirements of the specific system. For example, a system running a real-time application may need a different algorithm from one running batch-processing jobs.

Context Switching-

Context switching is the act of pausing one process and starting or resuming another. It is needed when the OS wants to let another process run or when a process must wait for a read or write operation to finish.

Context switching is a complicated procedure because the OS must save the state of the process being paused and restore the state of the process being resumed. During a context switch, the OS must also ensure that all data and resources are correctly preserved.

Context switches can be expensive, since saving and restoring a process's state takes time. However, the OS must still switch often enough that every process makes progress promptly.
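The save-and-restore step can be sketched as follows. This is a simplified Python model, not how a kernel does it: a real context switch runs in privileged code and also saves the stack pointer, flags, and memory-management state. The `CPU` and `PCB` classes here are hypothetical and hold only a program counter and a register dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class CPU:
    """The parts of the CPU state this sketch saves and restores."""
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


@dataclass
class PCB:
    """Simplified process control block holding a process's saved state."""
    pid: int
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    state: str = "ready"


def context_switch(cpu, old, new, old_state="ready"):
    """Pause `old` and resume `new` on `cpu`.

    old_state is "ready" for a preempted process or "waiting" for one
    that is blocked on I/O.
    """
    # Save the running process's CPU state into its PCB.
    old.program_counter = cpu.program_counter
    old.registers = dict(cpu.registers)
    old.state = old_state
    # Restore the next process's saved state onto the CPU.
    cpu.program_counter = new.program_counter
    cpu.registers = dict(new.registers)
    new.state = "running"


cpu = CPU(program_counter=100, registers={"r0": 7})
editor = PCB(pid=1, state="running")
shell = PCB(pid=2, program_counter=500, registers={"r0": 42})
context_switch(cpu, editor, shell, old_state="waiting")
print(cpu.program_counter, editor.program_counter)  # 500 100
```

Every switch copies state twice (out of the CPU and back in), which is where the cost mentioned above comes from.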

How Cycle Stealing Works-

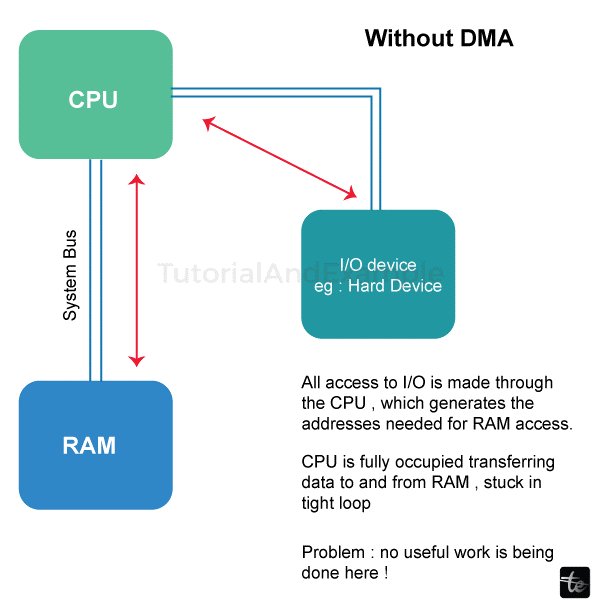

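Cycle stealing is one of the transfer modes of direct memory access (DMA). In burst (block) mode, the CPU completely relinquishes control of the memory bus while the DMA controller transfers a whole block of data. In cycle-stealing mode, the controller takes the bus for one cycle at a time and hands it back to the CPU in between, so the CPU is never locked out for long. This can be a more effective way to handle I/O when the CPU has other work to do. Cycle stealing can, however, also add delay to the CPU's operation, because the CPU may have to wait for the device to finish its bus cycle before it can continue.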

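The operating system (OS) plays a significant role in cycle stealing. The OS is responsible for scheduling CPU time and ensuring that all system resources are used effectively. When a device needs to transfer data, the OS programs the DMA controller with the memory address, the amount of data, and the direction of the transfer. In cycle-stealing mode, the controller and the CPU then share the memory bus through hardware arbitration, one cycle at a time, and the controller typically interrupts the CPU once the whole transfer is complete.

The OS should also keep track of how heavily each device uses the memory bus, so that no device takes so many cycles that the CPU is starved.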

Cycle stealing is a practical method for improving the efficiency of I/O-intensive workloads. However, it should be used with care, because it can add latency to CPU processing. The OS controls cycle stealing and ensures that it is used appropriately.
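The trade-off between handing the bus over for a whole block (burst mode) and taking one cycle at a time can be seen in a toy cycle-count model. It assumes the CPU wants the bus in every cycle (the worst case) and that one word moves per bus cycle; `steal_every` is a hypothetical parameter standing in for however a particular controller or OS limits how often a device may steal a cycle.

```python
def simulate_transfer(words, mode, steal_every=4):
    """Return (cycles_to_finish, longest_cpu_stall) for one block transfer.

    Toy model: the CPU wants the bus in every cycle, and one word moves per
    bus cycle given to the DMA controller.
    mode "burst": the controller keeps the bus until the block is done.
    mode "cycle_stealing": it takes one cycle out of every `steal_every`.
    """
    if mode not in ("burst", "cycle_stealing"):
        raise ValueError(f"unknown mode: {mode}")
    cycle = moved = stall = longest_stall = 0
    while moved < words:
        if mode == "burst":
            dma_has_bus = True
        else:
            dma_has_bus = cycle % steal_every == steal_every - 1
        if dma_has_bus:
            moved += 1
            stall += 1  # the CPU is waiting for the bus
            longest_stall = max(longest_stall, stall)
        else:
            stall = 0  # the CPU gets this cycle
        cycle += 1
    return cycle, longest_stall


print(simulate_transfer(8, "burst"))           # (8, 8)
print(simulate_transfer(8, "cycle_stealing"))  # (32, 1)
```

In this model burst mode finishes the block in 8 cycles but blocks the CPU for all 8, while cycle stealing takes 32 cycles but never makes the CPU wait more than one cycle at a time.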

The following are a few advantages of cycle stealing:

- It can improve the performance of I/O-intensive applications.

- It can reduce the CPU time spent moving data for I/O operations.

- It may allow the CPU to focus on other tasks.

The following are some disadvantages of cycle stealing:

- It can slow the CPU down, because the CPU sometimes has to wait for the bus.

- Debugging I/O issues can become more challenging.

- It might lower the system's total throughput.

Use cases of Cycle stealing-

Cycle stealing has a variety of applications, such as:

- Graphics cards: A graphics card can use cycle stealing to access memory when displaying images. While the graphics card renders frames, the CPU can still carry out other tasks.

- Network cards: A network card can use cycle stealing to access memory when transferring data. While the network card sends data, other processes can still run on the CPU.

- Storage devices: Storage devices, including hard disk drives and solid-state drives, can use cycle stealing to read and write data in memory. While the drive stores or transfers data, the CPU can keep running other activities.

Cycle stealing is useful in the following practical situations:

- While a video game's graphics card renders frames, the CPU can still run the game's logic. This helps the game maintain a smooth frame rate even when the graphics card is heavily loaded.

- While the network card sends and receives data, the CPU can keep running a web browser, so the browser stays responsive even on a slow network connection.

- In a file transfer program, the CPU can perform other tasks while the hard drive reads or writes data. This allows the file transfer to run in the background while the user keeps working.

Cycle stealing is an effective method for improving a computer system's performance. It lets I/O devices use memory with minimal disruption to the CPU, so the CPU can work on other tasks, resulting in a more responsive and fluid user experience.

Implementing Cycle Stealing-

The following technical concerns must be taken into account before cycle stealing is implemented in an operating system:

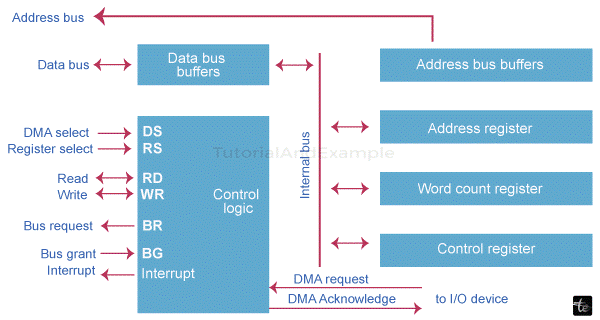

- When an I/O device is ready to access memory, it must be able to send a signal to the CPU. This signal is usually called a DMA request.

- The CPU must recognize DMA requests and hand control of the memory bus to the device that performs the transfer, which is usually called a DMA controller.

- The operating system must manage the I/O devices that use cycle stealing. This involves tracking which devices are currently cycle stealing and ensuring that they do not conflict with each other.
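The bookkeeping in the last point could look something like the following sketch. The class, the channel numbers, and the addresses are hypothetical; the registry simply refuses a new transfer that would reuse an active DMA channel or overlap a memory range another device is already using.

```python
class CycleStealRegistry:
    """Hypothetical bookkeeping for devices that are currently cycle stealing.

    Each active transfer claims a DMA channel and a memory range
    [start, start + length). A new transfer is refused if its channel is
    already in use or its memory range overlaps another active transfer.
    """

    def __init__(self):
        self.active = {}  # device name -> (channel, start, end)

    def start(self, device, channel, start, length):
        end = start + length
        for other, (ch, s, e) in self.active.items():
            if ch == channel:
                raise RuntimeError(f"DMA channel {channel} is busy ({other})")
            if start < e and s < end:
                raise RuntimeError(f"memory range overlaps {other}'s transfer")
        self.active[device] = (channel, start, end)

    def finish(self, device):
        """Release the device's channel and memory range when it is done."""
        self.active.pop(device, None)


registry = CycleStealRegistry()
registry.start("disk", channel=1, start=0x1000, length=512)
registry.start("nic", channel=2, start=0x2000, length=256)
try:
    registry.start("sound", channel=3, start=0x1100, length=64)
except RuntimeError as err:
    print(err)  # memory range overlaps disk's transfer
```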

Integration with CPU Scheduling-

Cycle stealing and CPU scheduling can be combined in several ways. One method is to have the CPU scheduler give higher priority to I/O-bound processes, that is, processes that spend most of their time waiting for I/O. Such a process can then issue its next I/O request quickly, which keeps the I/O devices busy with memory transfers.

Another technique is to have the CPU scheduler continually adjust a process's priority based on its I/O behavior. The goal is to keep the CPU as busy as possible while still letting I/O devices access memory when they need to.
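The second approach resembles a multilevel feedback queue. The sketch below uses a hypothetical rule: a process that gives up the CPU before its time slice ends (typically to wait for I/O) moves up one priority level, and a process that uses its whole slice moves down one level. The priority range and process names are illustrative.

```python
def adjust_priority(priority, used_full_slice, lowest=0, highest=7):
    """Return a process's new priority (a higher number runs sooner).

    Hypothetical feedback rule: a process that gave up the CPU early,
    typically to wait for I/O, moves up one level; a process that used its
    whole time slice moves down one level.
    """
    if used_full_slice:
        return max(lowest, priority - 1)
    return min(highest, priority + 1)


def pick_next(ready):
    """Choose the ready process with the highest priority (first one on ties)."""
    return max(ready, key=lambda proc: proc["priority"])


editor = {"name": "editor", "priority": 3}      # I/O-bound: blocks early
compiler = {"name": "compiler", "priority": 3}  # CPU-bound: uses full slices
editor["priority"] = adjust_priority(editor["priority"], used_full_slice=False)
compiler["priority"] = adjust_priority(compiler["priority"], used_full_slice=True)
print(pick_next([compiler, editor])["name"])  # editor
```

Because the I/O-bound editor ends up ahead of the CPU-bound compiler, it gets the CPU as soon as its I/O completes and can quickly start its next transfer.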

The following sequence shows how cycle stealing works in an operating system:

- The I/O device raises a DMA request.

- The CPU recognizes the DMA request and hands control of the memory bus to the DMA controller.

- The DMA controller reads or writes data in memory, using the memory address programmed by the OS and the data supplied by (or destined for) the I/O device.

- The DMA controller returns control of the bus to the CPU once it has finished.

- The CPU resumes the work that was paused by the DMA request. In cycle-stealing mode, these steps repeat for each transferred word until the whole block has been moved.
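The steps above can be traced with a toy Python model of a device-to-memory transfer. It is an illustration only: `memory` is a list standing in for RAM, `base_address` stands in for the address the OS programmed into the DMA controller, one word moves per stolen cycle, and the final completion interrupt is an assumption about how the controller reports the end of the block.

```python
def cycle_steal_transfer(device_data, memory, base_address):
    """Trace a device-to-memory transfer, one stolen bus cycle per word.

    memory is a list standing in for RAM; base_address stands in for the
    address the OS programmed into the DMA controller. Returns an event log.
    """
    log = []
    for offset, word in enumerate(device_data):
        log.append("DMA request")  # the device asks for the bus
        log.append("bus granted")  # the CPU hands the bus over
        memory[base_address + offset] = word  # the controller writes one word
        log.append(f"write {word} -> {base_address + offset}")
        log.append("bus released")  # control returns to the CPU
        log.append("CPU resumes")   # the CPU continues its work
    log.append("transfer-complete interrupt")  # assumed end-of-block signal
    return log


memory = [0] * 8
events = cycle_steal_transfer([11, 22], memory, base_address=4)
print(memory)      # [0, 0, 0, 0, 11, 22, 0, 0]
print(events[:5])  # ['DMA request', 'bus granted', 'write 11 -> 4', 'bus released', 'CPU resumes']
```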

Cycle Stealing Performance Evaluation in Operating Systems-

Operating systems use cycle stealing to let I/O devices access memory without stopping the CPU for more than a cycle at a time. It can boost a computer system's speed by letting the CPU work on other tasks while the I/O devices use memory.

The effectiveness of cycle stealing can be measured using a variety of indicators, including the following:

- Throughput: The rate at which bytes are transferred.

- Latency: The time it takes to transfer a single byte (or to complete a single request).

- CPU usage: The proportion of time the CPU is in use.

Cycle stealing can be contrasted with other I/O strategies, such as interrupt-driven I/O and burst-mode direct memory access (DMA).

Interrupt-driven I/O is the conventional method of I/O. The I/O device interrupts the CPU each time it is ready to transfer data, and the CPU stops its current task to handle the interrupt and move the data itself. As a result, the CPU can experience frequent interruptions, which hurt performance.

DMA makes I/O more efficient. An I/O device must obtain the CPU's permission to access memory.

The CPU then grants the DMA controller control of the bus, after which the DMA controller reads or writes data in memory independently of the CPU. As a result, the CPU can carry out other tasks while the I/O device accesses memory.

Benchmarks and metrics-

Cycle stealing performance can be assessed with the three metrics described above: throughput, latency, and CPU usage.

Running a benchmark that moves a large amount of data between memory and an I/O device shows throughput and latency. Running a computationally demanding task on the CPU at the same time shows CPU usage, that is, how much CPU time remains available while the transfer is in progress.
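Once a benchmark has been run, turning the raw measurements into throughput, latency, and CPU usage is simple arithmetic. The function and argument names below are hypothetical, and the example numbers are made up; the inputs are whatever your own benchmark measures.

```python
def transfer_metrics(bytes_moved, elapsed_s, request_latencies_s, cpu_busy_s):
    """Return (throughput, average latency, CPU usage) for one benchmark run.

    bytes_moved: total bytes transferred between memory and the device
    elapsed_s: wall-clock duration of the run, in seconds
    request_latencies_s: per-request transfer latencies, in seconds
    cpu_busy_s: time the CPU spent on the concurrent compute task, in seconds
    """
    throughput = bytes_moved / elapsed_s  # bytes per second
    avg_latency = sum(request_latencies_s) / len(request_latencies_s)
    cpu_usage = cpu_busy_s / elapsed_s  # fraction of the run, 0.0 to 1.0
    return throughput, avg_latency, cpu_usage


throughput, latency, cpu_usage = transfer_metrics(1_000_000, 2.0, [0.001, 0.003], 1.5)
print(throughput, cpu_usage)  # 500000.0 0.75
```

Comparing these three numbers across runs with cycle stealing, burst-mode DMA, and interrupt-driven I/O gives a like-for-like view of each method.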

Comparisons with Alternative Methods-

Cycle stealing can be contrasted with other I/O strategies, such as interrupt-driven I/O and burst-mode DMA.

- Interrupt-driven I/O: In this conventional method, the device interrupts the CPU whenever it is ready to transfer data, and the CPU stops its current task to move the data itself. These frequent interruptions hurt performance.

Cycle stealing is more efficient than interrupt-driven I/O because the DMA controller, not the CPU, moves the data, so the CPU can carry out other tasks while the I/O device accesses memory.

- Burst-mode DMA: As with cycle stealing, the CPU grants the DMA controller control of the bus, and the controller then reads or writes memory independently of the CPU. The difference is that in burst mode the controller keeps the bus until the whole block has been transferred. A block therefore moves faster than with cycle stealing, but the CPU is locked out of memory for the entire transfer, whereas with cycle stealing it loses only one bus cycle at a time.

The DMA modes can also be combined to improve performance further. For instance, a DMA controller can use burst mode for fast devices that move large blocks and cycle-stealing mode for slower devices, so that no single device locks the CPU out of memory for long.
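A rough way to see why DMA-based transfers reduce CPU involvement is to count CPU interrupts per block. This sketch assumes that interrupt-driven I/O raises one interrupt per 4-byte word and that a DMA transfer, whether in burst or cycle-stealing mode, raises a single completion interrupt; real devices often buffer several words per interrupt, so the gap is usually smaller.

```python
import math


def cpu_interrupts(block_bytes, word_bytes=4, method="interrupt"):
    """Count the CPU interrupts needed to move one block of data.

    Simplified model: interrupt-driven I/O interrupts the CPU once per word,
    and the CPU copies each word itself; with DMA (burst or cycle-stealing
    mode) the controller moves every word and interrupts once at the end.
    """
    words = math.ceil(block_bytes / word_bytes)
    if method == "interrupt":
        return words
    if method in ("burst", "cycle_stealing"):
        return 1
    raise ValueError(f"unknown method: {method}")


print(cpu_interrupts(4096, method="interrupt"))       # 1024
print(cpu_interrupts(4096, method="cycle_stealing"))  # 1
```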

Challenges-

A variety of difficulties are connected to cycle stealing, including:

- Interrupt latency: Cycle stealing can lengthen the time it takes the CPU to respond to an interrupt. This can be a problem for I/O devices that demand low latency, such as network cards and hard drives.

- CPU overhead: Cycle stealing can add CPU overhead, which lowers the CPU's overall performance. The CPU loses bus cycles to the device, and the OS must handle transfer-completion interrupts and keep track of which I/O devices are cycle stealing.

- Synchronization: Coordinating cycle stealing with other operations, such as burst-mode DMA transfers or processes that use the same buffers, can be challenging. The OS must prevent data corruption, for example by ensuring that a process does not read or modify a buffer while a transfer into or out of that buffer is still in progress.
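One common way to avoid this kind of corruption is to make readers wait until the transfer has finished. The sketch below simulates this in Python: a background thread stands in for the DMA controller and a `threading.Event` stands in for the completion interrupt. The `DmaBuffer` class is hypothetical; a real driver would also have to deal with CPU caches and would not start a second transfer into a buffer that is still being filled.

```python
import threading


class DmaBuffer:
    """Hypothetical buffer filled by a simulated DMA transfer.

    Readers block until the transfer-complete signal, so they never see a
    half-written buffer.
    """

    def __init__(self, size):
        self.data = bytearray(size)
        self._done = threading.Event()

    def start_transfer(self, source):
        if len(source) > len(self.data):
            raise ValueError("source is larger than the buffer")

        def controller():
            # Stands in for the DMA controller: one byte per stolen cycle.
            for i, byte in enumerate(source):
                self.data[i] = byte
            self._done.set()  # stands in for the completion interrupt

        self._done.clear()
        threading.Thread(target=controller).start()

    def read(self, timeout=None):
        """Return the buffer contents once the transfer has finished."""
        if not self._done.wait(timeout):
            raise TimeoutError("DMA transfer still in progress")
        return bytes(self.data)


buf = DmaBuffer(4)
buf.start_transfer(b"\x01\x02\x03\x04")
print(buf.read(timeout=5))  # b'\x01\x02\x03\x04'
```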

Upcoming Developments-

Cycle stealing may develop in several ways in the future, including:

Improved synchronization: New software and hardware techniques may improve synchronization between cycle stealing and other operations, such as DMA. This would make cycle stealing easier to apply in a wider range of applications.

Improved interrupt latency: New hardware and software solutions could reduce the effect of cycle stealing on the interrupt latency of I/O devices that need low latency.

Lower CPU overhead: New hardware and software solutions might reduce the CPU overhead of cycle stealing, improving the system's overall performance.