Multi-Layer Feed-Forward Neural Network

Introduction

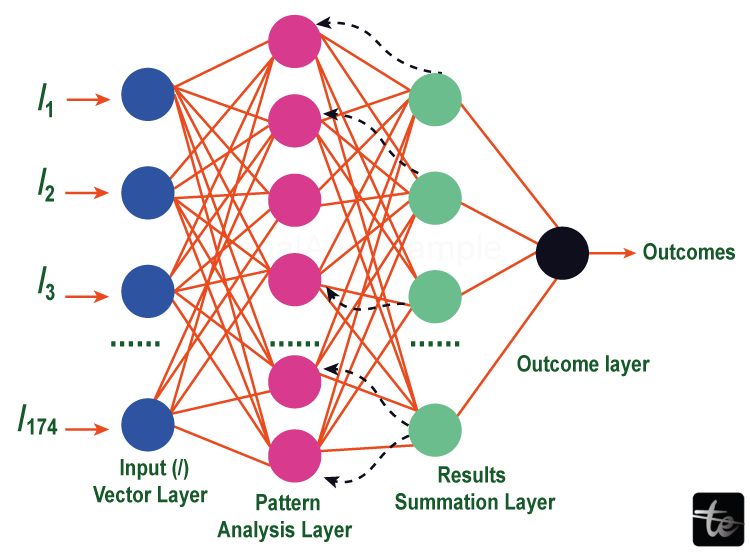

A multi-layer feed-forward neural network, also called a multilayer perceptron (MLP), is a type of artificial neural network made up of several layers of interconnected nodes, or neurons. It is a popular architecture for machine-learning tasks such as classification, regression, and pattern recognition. A network consists of an input layer, one or more hidden layers, and an output layer.

Each layer contains multiple neurons, and each neuron is connected to the neurons in the adjacent layers (the layer before it and the layer after it). Each connection carries a weight that determines its strength.

- In a feed-forward network, information moves in one direction only: from the input layer, through the hidden layers, to the output layer. Each neuron takes inputs from the neurons in the previous layer, computes a weighted sum of those inputs, applies an activation function, and sends the result to the neurons in the next layer.

- The activation function introduces non-linearity into the network, which enables it to learn complex relationships between inputs and outputs. Common activation functions in MLPs are the sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU).
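The three activation functions named above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation; the function names and the sample input `z` are assumptions chosen for the example.

```python
import numpy as np

def sigmoid(z):
    """Map any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Map any real value into the open interval (-1, 1)."""
    return np.tanh(z)

def relu(z):
    """Pass positive values through unchanged and clip negatives to 0."""
    return np.maximum(0.0, z)

# Hypothetical weighted sums arriving at three neurons.
z = np.array([-2.0, 0.0, 2.0])

print("sigmoid:", sigmoid(z))
print("tanh:   ", tanh(z))
print("relu:   ", relu(z))
```

All three are applied element-wise, so the same functions work for a single neuron or for a whole layer at once.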

Components

- Input layer: The input layer is the first layer of the network and is where the input data is fed in. Each neuron in the input layer represents one feature of the input data.

- Hidden layers: The layers between the input and output layers are known as hidden layers. Each hidden layer is made up of multiple neurons, also known as units or nodes, that process the data passed to them. The hidden layers learn and capture complex patterns in the data.

- Neurons: Neurons are the basic computing units of a network. Each neuron receives the outputs of the neurons in the previous layer, computes a weighted sum of them, and passes that sum through an activation function to produce its output. The non-linearity introduced by the activation function is what lets the network learn complex relationships.

- Activation Functions: Activation functions make the network non-linear. They convert a neuron's weighted sum of inputs into its output. Commonly used activation functions in multi-layer feed-forward neural networks are sigmoid, hyperbolic tangent (tanh), rectified linear unit (ReLU), and softmax (for the output layer in classification tasks).

- Output layer: The output layer is the final layer of the network and produces the predictions from the computations of the earlier layers. The number of neurons in the output layer depends on the task. For instance, in binary classification a single neuron may represent the probability of one class, whereas in multi-class classification there may be several neurons, each giving the probability of a distinct class.
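To make the components concrete, here is a minimal sketch of how they might fit together in NumPy. The layer sizes (3 input features, 4 hidden neurons, 2 output classes), the random initialisation, and the names `W1`, `b1`, `W2`, `b2`, and `forward` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    # Subtract the row-wise maximum first for numerical stability.
    shifted = z - z.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

# Hypothetical layer sizes: 3 input features, 4 hidden neurons, 2 classes.
n_inputs, n_hidden, n_classes = 3, 4, 2

# One weight per connection between adjacent layers, one bias per neuron.
W1 = rng.normal(scale=0.5, size=(n_inputs, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.5, size=(n_hidden, n_classes))
b2 = np.zeros(n_classes)

def forward(X):
    """Input layer -> hidden layer (ReLU) -> output layer (softmax)."""
    hidden = relu(X @ W1 + b1)        # weighted sum plus bias, then activation
    return softmax(hidden @ W2 + b2)  # one probability per class

# Two example inputs, each with 3 features.
X = np.array([[0.2, -1.0, 0.5],
              [1.5,  0.3, -0.7]])
probs = forward(X)
print(probs.shape)        # one row per input, one column per class
print(probs.sum(axis=1))  # each row of softmax outputs sums to 1
```

The weights here are random, so the probabilities are meaningless until the network is trained; the sketch only shows the shapes and the flow of data through the layers.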

Working

- Input data: The inputs to a neural network can be numerical features or raw data (such as images, text, or other types of data) that has been encoded as numbers.

- Activation and Weighted Sum: Each hidden-layer neuron multiplies its inputs by the corresponding weights, sums the products, and adds a bias term. The result is then passed through an activation function (e.g., sigmoid, ReLU) to introduce non-linearity and produce the neuron's output.

- Forward Propagation: The output of one layer serves as the input to the next. The weighted sum, activation, and hand-off to the following layer are repeated until the output layer is reached.

- Output Layer: The output layer produces the network's final prediction. The number of neurons in the output layer depends on the task. For instance, in binary classification a single neuron may represent the probability of one class, whereas in multi-class classification there may be several neurons, each giving the probability of a distinct class.

- Loss Calculation: The outputs predicted by the output layer are compared with the actual outputs (labels) in the training data. A loss function quantifies the discrepancy between predicted and actual outputs. The appropriate loss function depends on the task, for example cross-entropy loss for classification or mean squared error (MSE) for regression.

- Backpropagation: The gradients of the loss function with respect to the network's weights and biases are calculated using the chain rule of calculus. Each gradient indicates how the loss changes when the corresponding parameter changes. Backpropagation works backward from the output layer, layer by layer, to obtain the gradients for the weights and biases of each layer.

- Updates to Parameters: The weights and biases are updated using the gradients obtained through backpropagation. The update is usually carried out by an optimization algorithm such as stochastic gradient descent (SGD). To reduce the loss, the algorithm moves each parameter in the direction opposite to its gradient.

- Training in iterations: The steps from forward propagation through parameter updates (steps 3 to 7 of this list) are repeated over many epochs. In each pass, forward propagation computes the predicted outputs, backpropagation computes the gradients, and the parameter update adjusts the weights and biases. This iterative process is how the network learns and improves its predictions.

- Prediction: After training, the network is ready to make predictions on new inputs. Forward propagation is carried out with the learned weights and biases to produce the predictions.
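The steps above can be sketched end to end in NumPy. This is a minimal illustration under several assumptions that do not come from the text: the XOR toy dataset, a single hidden layer of 8 tanh neurons, a sigmoid output with binary cross-entropy loss, and plain full-batch gradient descent (rather than stochastic mini-batches) with a learning rate of 0.5. All variable and function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)

# Input data: the XOR toy problem (an assumption for illustration).
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

n_hidden = 8
W1 = rng.normal(scale=1.0, size=(2, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=1.0, size=(n_hidden, 1))
b2 = np.zeros(1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X):
    """Forward propagation: weighted sum plus bias, then activation, per layer."""
    h = np.tanh(X @ W1 + b1)   # hidden layer
    p = sigmoid(h @ W2 + b2)   # output layer: probability of class 1
    return h, p

def binary_cross_entropy(p, y):
    eps = 1e-12  # avoids log(0)
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

learning_rate = 0.5
losses = []
for epoch in range(5000):
    # Forward propagation and loss calculation.
    h, p = forward(X)
    losses.append(binary_cross_entropy(p, y))

    # Backpropagation: chain rule, from the output layer backward.
    n = X.shape[0]
    dz2 = (p - y) / n              # gradient w.r.t. the output pre-activation
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0)
    dh = dz2 @ W2.T
    dz1 = dh * (1.0 - h ** 2)      # derivative of tanh is 1 - tanh^2
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)

    # Parameter update: step opposite to the gradient.
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

# Prediction: forward propagation with the learned weights and biases.
_, p_new = forward(X)
print("first loss:", losses[0], "last loss:", losses[-1])
print("predictions:", np.round(p_new.ravel(), 2))
```

The expression `(p - y) / n` is the combined gradient of the sigmoid output and the binary cross-entropy loss, which is why no separate sigmoid derivative appears. A different loss or output activation would need a different first backward step.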

Applications

- Image Classification

- Natural Language Processing (NLP)

- Financial Forecasting

- Fraud Detection

- Medical Diagnosis

- Handwriting Recognition

- Recommender Systems