Elements of Parallel Computing in Cloud Computing

Introduction

Parallel computing marks a shift in how computational tasks are approached: instead of executing steps one at a time, it runs many interconnected operations simultaneously. This approach has become especially important as the demand for processing power has grown. In essence, parallel computing is a methodology for carrying out several calculations or processes at once, which can greatly increase computational speed and throughput, depending on how much of the work can actually run concurrently.

At its heart, parallel computing uses concurrency to harness many processors: a complex problem is broken down into smaller subtasks that run at the same time rather than one after the other. Unlike sequential computing, it follows a divide-and-conquer strategy in which each subtask is processed in parallel and the partial results are then combined, reducing the total computation time.
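The divide, conquer, and combine pattern can be sketched in Python as follows. This is a minimal illustration that assumes the data fits in memory; the helper names `chunk`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical. It uses threads from the standard `concurrent.futures` module to stay self-contained; for CPU-bound Python code one would normally swap in `ProcessPoolExecutor` (threads share the global interpreter lock), and in the cloud each chunk would more likely be sent to a separate VM or container.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk(data, n_chunks):
    """Split data into n_chunks contiguous slices of near-equal size."""
    size, rem = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def sum_of_squares(part):
    # The subtask: it depends only on its own chunk, so chunks can run concurrently.
    return sum(x * x for x in part)

def parallel_sum_of_squares(data, workers=4):
    parts = chunk(data, workers)                        # divide
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, parts))  # conquer, concurrently
    return sum(partials)                                # combine

if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1000))))
```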

Parallelism fits naturally into cloud computing. Parallel processing benefits from the large, scalable resources of cloud environments, which provide the infrastructure and flexibility needed to distribute tasks across many computing units and use those resources efficiently.

Parallelism relies on multiple processing units working together on a particular task. These units can range from the cores of a single processor to many processors spread across a distributed network. The goal is concurrent execution, with each unit contributing part of the overall computation.

Types of Memory

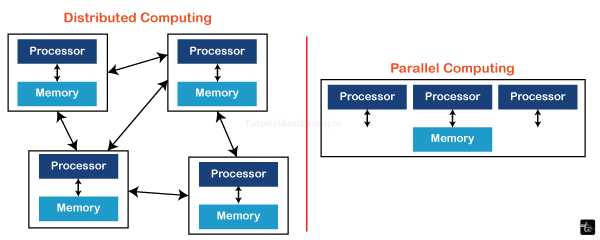

There are two primary memory models for parallel systems: shared memory and distributed memory. In shared memory systems, multiple processors access a common memory space, so they can exchange data simply by reading and writing the same locations, using synchronization to avoid conflicting updates. In distributed memory systems, each processor has its own memory, and processors communicate by passing messages. Cloud computing is often based on distributed memory models, which allow resources in geographically separate locations to work concurrently.
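To make the contrast concrete, here is a single-process sketch of both models. Threads stand in for processors: in the shared-memory version they update one variable guarded by a lock, while in the message-passing version they share no state and send their results through a queue. The function names are hypothetical, and a real distributed-memory system would exchange messages over the network (for example with MPI) rather than through an in-process `queue.Queue`.

```python
import queue
import threading

def shared_memory_total(values, n_workers=4):
    total = [0]              # a single memory location visible to every thread
    lock = threading.Lock()  # serializes updates so concurrent writes cannot interleave

    def worker(part):
        subtotal = sum(part)
        with lock:
            total[0] += subtotal

    threads = [threading.Thread(target=worker, args=(values[i::n_workers],))
               for i in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]

def message_passing_total(values, n_workers=4):
    inbox = queue.Queue()    # the only channel between the workers and the coordinator

    def worker(part):
        inbox.put(sum(part))  # send a message; no shared variable is modified

    threads = [threading.Thread(target=worker, args=(values[i::n_workers],))
               for i in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(inbox.get() for _ in range(n_workers))
```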

Overview of Cloud Computing

Cloud computing has transformed information technology because it offers a dynamic, scalable way to manage and access computing resources over the internet. In contrast to traditional on-premises infrastructure, cloud computing provides resources as services, which promotes agility, efficiency, and innovation.

Key Characteristics of Cloud Computing

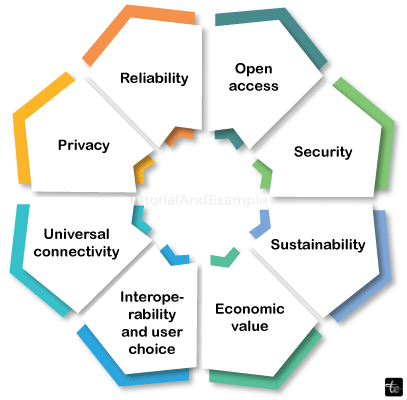

- On-Demand Self-Service: Users can provision computing resources such as servers and storage themselves, whenever they need them, without interacting with the provider's staff. This makes the cloud highly flexible and responsive to current requirements.

- Broad Network Access: Cloud services are available over the network and can be used from many kinds of devices, such as laptops, smartphones, and tablets. This broad availability makes remote work and collaboration possible.

- Resource Pooling: In cloud environments, resources are pooled to serve many consumers with different requirements, and they are assigned and reassigned dynamically according to demand. This multi-tenant model reduces resource waste and improves efficiency.

- Rapid Elasticity: Cloud services can scale up or down quickly as demand fluctuates. This elasticity lets organizations handle high workloads without paying for excess resources during periods of low demand.

- Measured Service: Cloud resource usage is metered, so providers can monitor, control, and optimize how resources are used. Users are charged for what they actually consume, which is cost-effective and transparent.

- Service Models: Cloud computing provides several service models, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). These models serve needs ranging from basic infrastructure to fully managed applications.

- Deployment Models: Cloud services can be deployed in various architectures, such as public cloud, private cloud, hybrid cloud, and multi-cloud. Each model differs in the control, customization, and security it offers.

Cloud Service Models (IaaS, PaaS, SaaS)

Infrastructure as a Service (IaaS)

Infrastructure as a Service delivers virtual computing resources to users over the internet. It provides basic building blocks such as virtual machines, storage, and networking. Users control the operating system, applications, and configuration, while the provider manages the underlying physical hardware.

IaaS is perfect for organizations that need scalable infrastructure but want to avoid dealing with hardware management.

Platform as a Service (PaaS)

PaaS offers a fully integrated environment for building, testing, and deploying applications, facilitating the development life cycle. Developers do not have to worry about the intricacies of infrastructure management and can concentrate on coding and innovation.

Software as a Service (SaaS)

Software as a Service delivers fully functional applications over the network on a subscription basis. These applications can be accessed through a web browser, which eliminates the need to install them locally. SaaS is characterized by centralized maintenance and automatic updates, and it can be accessed from different devices.

This model is easy to use and affordable and ensures that the software will always be up-to-date.

Cloud Deployment Models (Public, Private)

Public Cloud

Public cloud services are delivered over the internet by third-party providers and are available to the general public. The infrastructure is owned, operated, and maintained by the cloud service provider, which makes these services scalable and cost-effective. Public clouds work well for organizations with highly variable workloads that need rapid scaling and high availability.

Private Cloud

A private cloud is a dedicated environment used by only one organization. It can be hosted in-house or by a third-party provider. Private clouds offer more control, security, and customization, which makes them suitable for businesses with specific regulatory or compliance requirements.

Intersection of Parallel Computing and Cloud Computing

Parallel computing and cloud computing are two major technological paradigms that intersect to create a synergistic approach, enhancing performance, scalability, and efficiency when handling complex computational tasks.

Shared Resources and Scalability

Both parallel and cloud computing emphasize resource sharing and scalability. Parallel computing breaks tasks into smaller subtasks that are processed at the same time, while cloud computing offers on-demand access to a shared pool of computing resources. Combining the two makes it possible to gain the benefits of parallelism in an environment that can scale dynamically.

High-Performance Computing (HPC)

Parallel computing is the foundation of High-Performance Computing (HPC), which runs tasks concurrently to obtain results more quickly. Cloud computing complements parallelism by offering a variety of resources that can provide suitable infrastructure for parallel applications.

Dynamic Resource Allocation

Dynamic resource allocation in the cloud fits the need of parallel computing for distributed resources. Parallel applications can scale with demand, acquiring additional cloud resources when workloads increase and releasing them when demand decreases.

Task Parallelism in Cloud Environments

Task parallelism, a form of parallel computing in which multiple different tasks run concurrently, is common on cloud platforms. Tasks can be delegated to separate virtual machines or containers in the cloud infrastructure.
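A minimal sketch of task parallelism, assuming three hypothetical, unrelated tasks (`count_words`, `checksum`, `longest_word`) applied to the same input. Each task is submitted separately and runs concurrently with the others; on a cloud platform each one could instead be dispatched to its own VM or container.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

def count_words(text):
    return len(text.split())

def checksum(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def longest_word(text):
    return max(text.split(), key=len)

def run_tasks(text):
    # Three different tasks; none depends on another's result.
    tasks = {"words": count_words, "sha256": checksum, "longest": longest_word}
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}

if __name__ == "__main__":
    print(run_tasks("parallel tasks run in the cloud"))
```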

Hybrid Architectures

This intersection also includes hybrid setups in which organizations apply parallel computing strategies across their own infrastructure and the cloud. A hybrid strategy provides the flexibility to choose between in-house parallel systems and cloud resources according to computational needs and financial considerations.

Data Parallelism and Cloud Storage

Parallel computing emphasizes data parallelism, which cloud storage solutions complement well. Huge datasets can be stored in cloud storage, split into partitions, and processed simultaneously by parallel algorithms that apply the same operation to each partition.
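The following sketch illustrates data parallelism: the same `summarize` function is applied to every partition, and the partial results are then combined. The `PARTITIONS` dictionary is a hypothetical in-memory stand-in for objects in a cloud storage bucket; no real storage API is used.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical partitions standing in for objects in a cloud storage bucket.
PARTITIONS = {
    "part-0000": [21.5, 23.0, 19.8],
    "part-0001": [25.1, 22.4],
    "part-0002": [18.9, 26.3, 24.0, 20.2],
}

def summarize(readings):
    # The identical operation applied to every partition.
    return {"count": len(readings), "total": sum(readings), "max": max(readings)}

def parallel_summary(partitions, workers=3):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(summarize, partitions.values()))
    # Combine per-partition summaries into a global summary.
    count = sum(r["count"] for r in results)
    total = sum(r["total"] for r in results)
    return {"count": count, "mean": total / count, "max": max(r["max"] for r in results)}
```

Because each partition is summarized independently, adding partitions adds work that can be spread over more workers without changing the combining step.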

Scalability in Cloud Parallelism

Scalability is central to modern computing environments. When parallelism is introduced into the cloud, the two together make it possible to handle a wide variety of workloads efficiently, including workloads that would be impractical on fixed infrastructure. This combination of scalability and parallelism creates a flexible, responsive computing paradigm.

Dynamic Resource Allocation

At the heart of scaling in cloud parallelism is the dynamic allocation of resources based on demand. Cloud platforms can scale vertically, by increasing the power of individual virtual machines, or horizontally, by adding more instances.

Elasticity for Workload Variability

Elasticity, the ability to scale resources up and down as needed, lets cloud parallelism handle workload variation gracefully. Parallel applications can scale dynamically as the workload changes, which provides enough resources during peak demand and conserves resources when demand is low, keeping operations cost-effective.

Task Parallelism and Load Balancing

Task parallelism, another fundamental concept in parallel computing, fits neatly into cloud-based load-balancing mechanisms. In cloud parallelism, scalability depends on spreading tasks effectively across the available resources so that no node becomes a bottleneck and resource usage stays even.
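One simple way to spread tasks evenly, shown here as a sketch, is the greedy longest-processing-time-first heuristic: sort tasks by estimated cost and always give the next task to the node with the smallest current load. The task costs are assumed to be known in advance, which real schedulers often have to estimate.

```python
import heapq

def assign_tasks(task_costs, n_nodes):
    """Greedy longest-processing-time-first: the largest remaining task goes to the least-loaded node."""
    heap = [(0, node) for node in range(n_nodes)]  # (current load, node id)
    heapq.heapify(heap)
    assignment = {node: [] for node in range(n_nodes)}
    for task, cost in sorted(task_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, node = heapq.heappop(heap)           # least-loaded node right now
        assignment[node].append(task)
        heapq.heappush(heap, (load + cost, node))
    loads = {node: sum(task_costs[t] for t in tasks) for node, tasks in assignment.items()}
    return assignment, loads
```

For example, five tasks costing 7, 5, 4, 3, and 1 units on two nodes end up with a load of 10 units on each node.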

Auto-Scaling and Parallel Efficiency

Auto-scaling features in cloud environments support the efficiency goals of parallel computing. As the workload increases, auto-scaling can automatically provision more resources so that parallel applications continue to perform efficiently.
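Many auto-scalers use a target-tracking rule: scale the number of replicas in proportion to how far a metric such as CPU utilization is from its target. The sketch below follows the ratio documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`, clamped to hypothetical minimum and maximum replica counts. Real autoscalers also apply tolerances and stabilization windows, which this sketch leaves out.

```python
import math

def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=20):
    """Target tracking: scale the replica count in proportion to observed load."""
    if current_replicas <= 0 or target_utilization <= 0:
        raise ValueError("replicas and target utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp to the allowed range (min/max values here are illustrative assumptions).
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas running at 90% utilization against a 60% target give `desired_replicas(4, 90, 60) == 6`, while the same 4 replicas at 30% scale in to 2.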

Horizontal Scaling for Parallel Applications

Horizontal scaling, which adds more computing instances, is one of the fundamental approaches to scaling parallel applications. Applications designed to scale out split their tasks across multiple instances, so that more instances can work on the problem in parallel.

Cost-Effective Resource Management

Scalability in cloud parallelism also makes resource management cost-efficient, because resources are scaled to actual demand rather than over-provisioned. The pay-as-you-go model aligns well with both scalability and parallelism.

Parallel Algorithms for Cloud Services

MapReduce Paradigm

MapReduce is a parallel programming model introduced by Google and widely used in cloud computing services. It breaks a large job into smaller subtasks, distributes them across multiple nodes in a map phase, and combines the intermediate results in a reduce phase.
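The classic MapReduce example is word counting. The sketch below runs the map phase on each input split concurrently, groups the intermediate pairs by key (the shuffle step that a MapReduce framework performs automatically), and then reduces each group. It is a single-machine illustration with hypothetical function names, not the API of Hadoop or any other framework.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import chain

def map_phase(split):
    # Map: emit a (word, 1) pair for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]

def shuffle(mapped_outputs):
    # Shuffle: group all intermediate values by key.
    groups = {}
    for word, count in chain.from_iterable(mapped_outputs):
        groups.setdefault(word, []).append(count)
    return groups

def reduce_phase(item):
    # Reduce: combine all values for one key.
    word, counts = item
    return word, sum(counts)

def word_count(splits, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
        return dict(pool.map(reduce_phase, shuffle(mapped).items()))
```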

Parallel Sorting Techniques

Large-scale datasets are often sorted in cloud computing, and parallel algorithms offer effective solutions to this problem. Parallel sorting techniques divide the work of ordering data into smaller tasks for multiple nodes and then combine the partial results, which speeds up the overall sort.
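A common way to sort in parallel is to sort independent runs concurrently and then merge them, the pattern behind parallel merge sort. This sketch assumes the whole dataset fits in memory; it sorts runs in worker threads and merges them with the standard library's `heapq.merge`. In a cluster, each run would be sorted on a different node.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

def parallel_sort(data, workers=4):
    # 1. Partition: split the input into roughly equal runs, one per worker.
    run_size = max(1, -(-len(data) // workers))  # ceiling division
    runs = [data[i:i + run_size] for i in range(0, len(data), run_size)]
    # 2. Sort each run independently and concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        sorted_runs = list(pool.map(sorted, runs))
    # 3. Merge: a k-way merge of the sorted runs yields one ordered list.
    return list(heapq.merge(*sorted_runs))
```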

Parallel Machine Learning Algorithms

Parallel algorithms for model training and prediction are a valuable component of many cloud services, especially in machine learning. By distributing algorithms such as stochastic gradient descent and k-means clustering across many machines, cloud platforms can accelerate training and make it practical to work with larger datasets and models.
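Data-parallel training can be sketched as follows: each worker computes the loss gradient on its own data shard, the gradients are summed, and one shared model is updated. For simplicity this example fits a one-variable linear model `y ≈ w*x + b` with full-batch gradient descent; stochastic gradient descent would instead have each shard sample mini-batches. The learning rate and epoch count are assumptions chosen for the small example data, not general recommendations.

```python
from concurrent.futures import ThreadPoolExecutor

def shard_gradient(args):
    """Summed gradient of squared error for the model y = w*x + b on one shard."""
    w, b, shard = args
    grad_w = grad_b = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        grad_w += 2 * err * x
        grad_b += 2 * err
    return grad_w, grad_b

def train(shards, lr=0.3, epochs=2000):
    w = b = 0.0
    n = sum(len(shard) for shard in shards)
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        for _ in range(epochs):
            # Every worker evaluates its shard against the same current (w, b).
            grads = list(pool.map(shard_gradient, [(w, b, s) for s in shards]))
            # Aggregate: summed shard gradients divided by n give the full-dataset mean gradient.
            w -= lr * sum(g[0] for g in grads) / n
            b -= lr * sum(g[1] for g in grads) / n
    return w, b
```

With noise-free points on the line `y = 2x + 1` split across three shards, the parameters converge to approximately `w = 2` and `b = 1`.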

Parallel Graph Processing

Cloud services use parallel graph processing algorithms to work with complex relationships, such as those in social networks or recommendation systems. Computation models such as Bulk Synchronous Parallel (BSP) allow large-scale graphs to be traversed and analyzed efficiently, supporting applications that require intensive graph computation in the cloud.
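Under BSP, computation proceeds in supersteps: each vertex computes locally and sends messages, and then all workers wait at a barrier before the next superstep begins. The sketch below expresses breadth-first search (hop distance from a source vertex) in that style. It runs sequentially for clarity; the per-vertex work inside a superstep is what a BSP system would distribute across workers. Every vertex is assumed to appear as a key in the adjacency dictionary.

```python
def bsp_bfs(graph, source):
    """Level-synchronous BFS in BSP style: compute, send messages, barrier, repeat."""
    dist = {v: None for v in graph}
    dist[source] = 0
    frontier = {source}
    superstep = 0
    while frontier:
        # Compute/communicate: each active vertex messages its neighbours.
        # (A BSP system would run these vertices in parallel on many workers.)
        outbox = [(nbr, superstep + 1) for v in frontier for nbr in graph[v]]
        # Barrier: all messages are delivered before the next superstep starts.
        next_frontier = set()
        for target, d in outbox:
            if dist[target] is None:
                dist[target] = d
                next_frontier.add(target)
        frontier = next_frontier
        superstep += 1
    return dist
```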

Parallel Search and Retrieval

Many cloud-based services depend on efficient search and retrieval. Parallel search algorithms, such as parallel binary search or parallel tree search, speed up locating and retrieving information from enormous datasets.
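As a sketch of parallel search, assume the data is split into shards whose keys are each kept sorted (the shard layout here is hypothetical). Every shard is searched concurrently with a binary search from the standard `bisect` module, and the first hit is returned.

```python
import bisect
from concurrent.futures import ThreadPoolExecutor

def search_shard(args):
    shard_id, keys, target = args
    i = bisect.bisect_left(keys, target)  # binary search within one sorted shard
    if i < len(keys) and keys[i] == target:
        return shard_id, i
    return None

def parallel_lookup(shards, target):
    # Query every shard concurrently; return (shard id, position) of the first match.
    jobs = [(sid, keys, target) for sid, keys in shards.items()]
    if not jobs:
        return None
    with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
        for hit in pool.map(search_shard, jobs):
            if hit is not None:
                return hit
    return None
```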

Dynamic Load Balancing Algorithms

In a cloud environment, distributing tasks evenly across resources is critical for optimal performance. Dynamic load balancing algorithms monitor resource usage on each node and continuously redistribute tasks to keep the load balanced.
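One common form of dynamic load balancing is a pull-based work queue: instead of assigning tasks up front, idle workers take the next task as soon as they finish, so faster or less-loaded workers automatically take on more work. The sketch below simulates this with threads, using `time.sleep` as a stand-in for real computation; it does not implement the per-node resource monitoring that some balancers also use.

```python
import queue
import threading
import time

def run_with_work_queue(task_durations, n_workers=3):
    """Workers pull the next task as soon as they are free (pull-based balancing)."""
    tasks = queue.Queue()
    for task_id, duration in enumerate(task_durations):
        tasks.put((task_id, duration))
    completed = {w: [] for w in range(n_workers)}  # each worker appends only to its own list

    def worker(worker_id):
        while True:
            try:
                task_id, duration = tasks.get_nowait()
            except queue.Empty:
                return                 # no work left
            time.sleep(duration)       # stand-in for real computation
            completed[worker_id].append(task_id)

    threads = [threading.Thread(target=worker, args=(w,)) for w in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return completed
```

A worker that draws a long task simply takes fewer of the remaining short ones, so no central scheduler has to predict task lengths.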

Parallel Encryption and Security Algorithms

Parallel algorithms also support security in cloud computing by encrypting and decrypting data in parallel. These algorithms divide cryptographic operations into independent parts that can run concurrently, so data stays protected while computational resources are used efficiently.
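Counter (CTR) mode is a standard example of a parallelizable encryption scheme: each block of keystream depends only on the key, a nonce, and the block index, so all blocks can be processed independently. The toy sketch below derives keystream blocks from SHA-256 purely to stay within Python's standard library. It illustrates the structure only and is not a vetted cipher; production systems should use AES in CTR or GCM mode from an established cryptography library.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

BLOCK = 32  # bytes produced by one SHA-256 digest

def keystream_block(key, nonce, counter):
    # TOY construction for illustration only, not a vetted cipher.
    # Each block depends only on (key, nonce, counter), so blocks are independent.
    return hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()

def xor_block(args):
    key, nonce, index, chunk = args
    keystream = keystream_block(key, nonce, index)
    return bytes(a ^ b for a, b in zip(chunk, keystream))

def ctr_transform(key, nonce, data, workers=4):
    """Encrypts or decrypts; in counter mode both are the same XOR operation."""
    chunks = [(key, nonce, i, data[off:off + BLOCK])
              for i, off in enumerate(range(0, len(data), BLOCK))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(xor_block, chunks))
```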

Challenges in Implementing Parallel Computing in Cloud Computing

Data Dependency and Communication Overhead

Parallel algorithms usually divide a task into several smaller tasks that are processed in parallel. However, managing the dependencies between these tasks, and the communication overhead of exchanging data between them, is not trivial.

Load Balancing Across Nodes

Efficient parallel computing requires distributing tasks evenly across multiple nodes. Load imbalance can occur when some nodes are underutilized while others are overloaded because of differences in task complexity or data distribution. Efficient load-balancing algorithms are needed to address this and make the best possible use of resources.

Scalability and Resource Allocation

Scalability is a difficult problem in cloud-based parallel computing because workloads change dynamically. Sophisticated mechanisms are needed to allocate resources effectively as workloads and demand fluctuate. Inadequate scalability planning can lead to wasted resources or poor performance during peak loads.

Synchronization and Consistency

Keeping synchronization and consistency among distributed nodes is one of the key concerns in parallel computing. Concurrent updates, race conditions, or synchronization delays can compromise the integrity of computations through inconsistent results. Effective synchronization mechanisms play a critical role in ensuring reliable parallel processing.
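A barrier is one basic synchronization mechanism: no thread proceeds past it until all threads have reached it. In this sketch (function name hypothetical), each worker writes only its own slot in phase 1, and `threading.Barrier` ensures that no worker reads the shared results in phase 2 until every slot has been written, so all workers observe the same, complete total.

```python
import threading

def two_phase_with_barrier(values, n_workers=4):
    """Phase 1: each worker sums its slice. Phase 2 begins only after all have finished."""
    partial = [0] * n_workers
    result = [None] * n_workers
    barrier = threading.Barrier(n_workers)

    def worker(w):
        partial[w] = sum(values[w::n_workers])  # phase 1: write only this worker's slot
        barrier.wait()                          # wait until every slot has been written
        result[w] = sum(partial)                # phase 2: reading all slots is now safe

    threads = [threading.Thread(target=worker, args=(w,)) for w in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result
```

Without the barrier, a fast worker could read `partial` before slower workers had written their sums and compute an inconsistent total.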

Fault Tolerance and Resilience

Hardware failures, network disruptions, and software errors can all occur in cloud environments. Ensuring fault tolerance and resilience in parallel computing tasks is difficult, especially when processing is distributed across multiple nodes. Strategies such as checkpointing, replication, and recovery mechanisms are needed to minimize the effects of failures.
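Checkpointing can be sketched as saving progress regularly so that a restarted task resumes from its last saved state instead of starting over. In this minimal example the checkpoint is a JSON file written after every item, and a node failure is simulated with the hypothetical `fail_at` parameter; real systems usually checkpoint less often and to durable shared storage.

```python
import json
import os
import tempfile

def process_with_checkpoint(items, checkpoint_path, fail_at=None):
    """Sum the squares of items, resuming from a saved checkpoint if one exists."""
    state = {"next_index": 0, "total": 0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # resume where the previous run stopped
    for i in range(state["next_index"], len(items)):
        if i == fail_at:
            raise RuntimeError(f"simulated node failure at item {i}")
        state["total"] += items[i] ** 2
        state["next_index"] = i + 1
        # Write a temporary file, then atomically replace the checkpoint, so the
        # checkpoint is never left half-written if the process dies mid-write.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, checkpoint_path)
    return state["total"]

if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
    try:
        process_with_checkpoint(list(range(10)), path, fail_at=6)
    except RuntimeError as err:
        print("first run:", err)
    print("resumed run:", process_with_checkpoint(list(range(10)), path))
```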

Complexity of Parallel Programming Models

Building parallel algorithms and applications requires knowledge of parallel programming models. The complexity of models such as MapReduce or Bulk Synchronous Parallel is often a stumbling block for programmers, so simpler and more secure programming frameworks that make parallelism easier to adopt will be critical going forward.

Advantages of Parallel Cloud Computing

Enhanced Performance and Speed

Parallel computing allows several tasks to run simultaneously on a set of processors or nodes, so operations can complete much faster than in a traditional sequential setting. Large datasets and complex algorithms can therefore be processed more quickly, providing faster insights and more efficient workflows.

Scalability for Growing Workloads

Cloud-based parallelism scales well, adapting smoothly to growing workloads. As demand grows, more resources can be allocated dynamically to maintain performance without large hardware investments. This scalability is important for handling varied workloads and changing business needs.

Efficient Resource Utilization

Parallel cloud computing optimizes the utilization of resources by allocating tasks across several nodes. This ensures efficient usage of computing resources, reducing idle time and increasing throughput. Cost-effective and sustainable cloud infrastructure also benefits from enhanced resource utilization.

Complex Problem Solving

Parallelism is particularly useful when a given problem requires much computation and involves independent or semi-independent subtasks. Parallel processing is advantageous for tasks such as simulations, data analytics, and scientific computations because they can be parallelized to speed up results.

Increased Throughput and Capacity

Parallelism lets cloud environments handle more tasks concurrently. As a result, organizations can process larger volumes of data and perform more calculations without degrading performance.

Response to Real-time Requirements

Parallel cloud computing is appropriate for applications with real-time processing needs. Organizations can meet stringent deadlines as well as respond to time-sensitive data processing needs such as financial transactions, video streaming, or real-time analytics by parallelizing tasks.

Improved Data Analytics and Machine Learning

Parallel processing is beneficial for data-intensive tasks that include analytics and machine learning algorithms. Cloud platforms with parallel functions enable researchers and data scientists to analyze gigantic datasets effectively, train sophisticated models, and interpret the results properly.

Future Trends and Innovations

Quantum Parallelism Integration

Quantum parallelism, which relies on properties of qubits such as superposition, may transform how complex calculations are handled in the cloud. As quantum technologies develop, integrating them into cloud designs could bring major advances in computational power and problem-solving.

Edge Computing Synergy

The synergy between parallel computing and edge computing is likely to grow. Edge computing moves computational resources closer to end users, which reduces latency and improves real-time processing. Edge environments that support parallelism will allow tasks to be distributed effectively across edge devices, improving overall system performance and responsiveness.

Specialized Hardware Accelerators

Specialized hardware accelerators designed for parallel workloads will become more prevalent. Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), and other accelerators will be increasingly optimized for parallel computing tasks, offering dedicated resources for particular types of calculations, such as artificial intelligence and deep learning.

Hybrid Parallel-Quantum Cloud Architectures

Hybrid cloud architectures that combine classical parallel computing with emerging quantum computing capabilities are expected to become a focus. Such architectures would let organizations exploit both classical and quantum parallelism, addressing a wider set of computational challenges with potentially greater efficiency.

Containerization and Microservices

Parallel cloud computing will make use of containerization and orchestration technologies such as Docker and Kubernetes. Packaging parallelized applications into containers simplifies deployment, scaling, and management. Microservices architectures will also add flexibility and agility when dealing with parallel workloads.

Quantum-Safe Cryptography

The advance of quantum computing has raised questions about how secure current cryptographic systems are. Quantum-safe cryptography is therefore likely to be adopted in parallel cloud computing to protect data, using cryptographic algorithms designed to remain secure even against powerful quantum computers.

Autonomic Computing and Self-Optimizing Systems

Autonomic computing principles will drive the development of self-optimizing systems in parallel cloud environments. Such systems will be able to reconfigure resources, distribute workloads, and optimize performance dynamically as demand changes. The goal is self-aware, self-adjusting computing infrastructure.

Energy-Efficient Parallelism

Energy-efficiency efforts in parallel cloud computing will intensify. Innovations in hardware design, cooling technologies, and power management strategies will aim to minimize the environmental impact of parallel computing operations, and future parallel cloud architectures will incorporate green computing practices.

Interdisciplinary Collaboration

Because parallel computing applications span many domains, collaboration between computer scientists and domain specialists will become increasingly important. This collaborative approach will lead to tailored parallel solutions for industries such as healthcare, finance, and scientific research.

Conclusion

In conclusion, the integration of parallel computing with cloud infrastructure marks a transformative era in computational capabilities. The elements discussed, from scalable parallel algorithms to the challenges of implementation, form the building blocks of a dynamic and efficient computing paradigm. As advancements such as quantum integration and specialized hardware accelerators unfold, the synergy between parallelism and the cloud is poised to redefine the boundaries of computational power.