TensorFlow tf.data.Dataset.from_tensor_slices()

Introduction to TensorFlow:

The Google Brain team created the well-known open-source deep learning package called TensorFlow. It is intended to make it easier to build, train, and deploy machine learning models, in particular deep neural networks.

TensorFlow is widely used in academia and industry for many applications, such as computer vision, natural language processing, and speech recognition.

To proceed, we should first understand TensorFlow's bigger picture and how data flows through it. This article focuses on tf.data.Dataset.from_tensor_slices(), the function that turns in-memory data into a Dataset.

The function's signature is as follows:

tf.data.Dataset.from_tensor_slices(tensors)

The step-by-step working of tf.data.Dataset.from_tensor_slices():

1. Input Data Preparation:

Your data must first be prepared as one or more tensors. The size of these tensors along the first dimension, that is, the number of samples or elements, must be the same. For instance, you might use a NumPy array with the shape (num_samples, image_height, image_width, num_channels) to represent a collection of images.

2. Creating the Dataset:

With the input data prepared, you call tf.data.Dataset.from_tensor_slices() and pass the input tensors as its argument (a single tensor, or a tuple or dictionary of tensors). The function uses the input tensors to generate a Dataset object.
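As a rough sketch of this step, the argument may be a single array, a tuple of arrays, or a dictionary of arrays, as long as they all share the same first dimension. The `features` and `labels` arrays are made-up toy values used only for illustration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 3 samples with 2 features each, plus one label per sample.
features = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], dtype=np.float32)
labels = np.array([0, 1, 0], dtype=np.int64)

# A tuple of arrays yields (feature, label) pairs.
pair_ds = tf.data.Dataset.from_tensor_slices((features, labels))

# A dictionary of arrays yields dictionaries with the same keys.
dict_ds = tf.data.Dataset.from_tensor_slices({"x": features, "y": labels})

first_pair = next(iter(pair_ds))
first_dict = next(iter(dict_ds))

print(first_pair[0].numpy(), first_pair[1].numpy())
print(sorted(first_dict.keys()))
```

Both datasets contain three elements, one per row of the inputs.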

3. Tensor Slicing:

The function slices each input tensor along the first dimension (axis 0), and each slice becomes one element of the dataset. This means that if your input has n samples, the function produces a dataset with n elements.

4. Element Representation:

Each element in the dataset is the corresponding slice of the input tensors. For instance, the first element in the dataset is the first sample from the input tensors, and it has the same shape as a single sample in the input tensors.
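The slicing behavior described in steps 3 and 4 can be checked with a small sketch. The `samples` array is a hypothetical stand-in for real image data.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 5 samples, each a 2x3 "image" with 1 channel.
samples = np.arange(5 * 2 * 3 * 1, dtype=np.float32).reshape(5, 2, 3, 1)

dataset = tf.data.Dataset.from_tensor_slices(samples)

# Slicing along axis 0 gives one element per sample.
num_elements = int(dataset.cardinality().numpy())

# Each element has the shape of a single sample (the first dimension is gone).
element_shape = dataset.element_spec.shape.as_list()

first_element = next(iter(dataset)).numpy()

print(num_elements)   # 5
print(element_shape)  # [2, 3, 1]
```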

5. Example Usage:

An example of how to use tf.data.Dataset.from_tensor_slices():

import tensorflow as tf

import numpy as np

images = np.array([...]) # Shape: (num_samples, image_height, image_width, num_channels)

dataset = tf.data.Dataset.from_tensor_slices(images)

6. Data Pipeline:

Once the dataset has been created, it can be used in your data pipeline for model training or testing. To preprocess and augment the data as necessary, you can apply several transformations to the dataset using methods like map(), batch(), shuffle(), and more.

dataset = dataset.shuffle(buffer_size=1000)

dataset = dataset.batch(batch_size=32)

dataset = dataset.prefetch(buffer_size=tf.data.AUTOTUNE)
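The map() transformation mentioned above can be sketched as follows. The `normalize` function and the random `images` array are assumptions made for illustration; a real pipeline would apply whatever preprocessing the model needs.

```python
import numpy as np
import tensorflow as tf

# Hypothetical stand-in data: 10 tiny 4x4 RGB images with uint8 pixel values.
images = np.random.randint(0, 256, size=(10, 4, 4, 3), dtype=np.uint8)

def normalize(image):
    """Scale pixel values from [0, 255] to [0.0, 1.0]."""
    return tf.cast(image, tf.float32) / 255.0

dataset = (
    tf.data.Dataset.from_tensor_slices(images)
    .map(normalize, num_parallel_calls=tf.data.AUTOTUNE)  # applied per element
    .batch(4)
)

batches = [batch.numpy() for batch in dataset]
print([batch.shape for batch in batches])
```

Because map() is applied before batch(), `normalize` receives one image at a time rather than a whole batch.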

7. Iterating over the Dataset:

To train or test a model, you iterate over the dataset, for example through an iterator created from it.

iterator = dataset.as_numpy_iterator()

for batch in iterator:
    print(batch.shape)  # each batch is a NumPy array of images
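As a small sketch of the two common ways to iterate, the code below loops over a dataset directly, which yields tf.Tensor objects, and through as_numpy_iterator(), which yields NumPy arrays. The six-element input is a made-up example.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy dataset: the integers 0..5 in batches of 4.
dataset = tf.data.Dataset.from_tensor_slices(np.arange(6)).batch(4)

# Iterating the dataset directly yields eager tensors.
tensor_batches = [batch for batch in dataset]

# as_numpy_iterator() converts each element to a NumPy array.
numpy_batches = list(dataset.as_numpy_iterator())

print(type(tensor_batches[0]).__name__)
print(numpy_batches[1])  # the second batch holds the remaining two values
```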

In summary, tf.data.Dataset.from_tensor_slices() is a powerful TensorFlow function that lets you turn in-memory data into a Dataset object, which can then be processed efficiently and used as part of your machine learning workflow. The resulting dataset makes it possible to build reliable and efficient data pipelines for training and evaluating your machine learning models.

Preparing and processing data efficiently is critical for machine learning models. A quick and practical approach to building data input pipelines is TensorFlow's tf.data API. It handles large-scale data processing efficiently while supporting the creation of complex neural networks. It makes it easy to preprocess large datasets, iterate over them, and feed them seamlessly into your machine learning models.

The tf.data.Dataset.from_tensor_slices() function is one of the methods in the tf.data.Dataset API. It converts in-memory data (like NumPy arrays or Python lists) into a Dataset object that can be easily processed and used for model training or evaluation.

It slices the input tensors along the first dimension to produce the individual elements of the dataset. Each element in the dataset is the matching slice of the input tensor(s). TensorFlow data pipelines frequently use this function to prepare data for model training and evaluation.
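To illustrate the point about Python lists, here is a minimal sketch with made-up values. A flat list yields scalars, and a nested list yields one row per element.

```python
import tensorflow as tf

# A flat Python list becomes a dataset of scalars.
numbers = tf.data.Dataset.from_tensor_slices([10, 20, 30])

# A nested list is sliced along its first dimension, one row per element.
rows = tf.data.Dataset.from_tensor_slices([[1, 2], [3, 4], [5, 6]])

number_values = [int(value) for value in numbers.as_numpy_iterator()]
row_values = [row.tolist() for row in rows.as_numpy_iterator()]

print(number_values)  # [10, 20, 30]
print(row_values)     # [[1, 2], [3, 4], [5, 6]]
```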

Python code demonstrating how to use tf.data.Dataset.from_tensor_slices() to construct a dataset from in-memory data and set up a basic data pipeline for model training:

import tensorflow as tf

import numpy as np

# Step 1: Prepare the input data (Assume you have a dataset of images as NumPy arrays)

images = np.random.rand(100, 28, 28, 3)

labels = np.random.randint(0, 10, size=(100,))

# Step 2: Create a Dataset from the images and labels

image_dataset = tf.data.Dataset.from_tensor_slices(images)

label_dataset = tf.data.Dataset.from_tensor_slices(labels)

# Step 3: Combine the image and label datasets into a single dataset

dataset = tf.data.Dataset.zip((image_dataset, label_dataset))

# Step 4: Data Pipeline - Shuffle, batch, and prefetch the data

batch_size = 32

buffer_size = 1000

# Shuffle the dataset (optional but recommended for training)

dataset = dataset.shuffle(buffer_size=buffer_size)

# Batch the data

dataset = dataset.batch(batch_size=batch_size)

# Prefetch the data for better performance

dataset = dataset.prefetch(buffer_size=tf.data.AUTOTUNE)

# Step 5: Create an iterator from the dataset

iterator = dataset.as_numpy_iterator()

# Step 6: Iterate over the dataset (Example usage during model training)

for batch_images, batch_labels in iterator:
    # Here, you would perform model training or evaluation using the current batch of data
    print("Batch Images Shape:", batch_images.shape)
    print("Batch Labels Shape:", batch_labels.shape)

Note: In this example, we have used random data for illustration purposes. Replace the example data with your actual data (e.g., by loading images from files) and adjust the model training code accordingly. The required modules (TensorFlow and NumPy) must be installed to run the code and reproduce the output.

Make sure that your own data replaces the image and label arrays in the code. The code builds a data pipeline by combining the image and label datasets and then shuffling, batching, and prefetching the data for efficient model training. You can replace the print statements inside the loop with your actual model training code to train your machine learning model on the supplied batches.

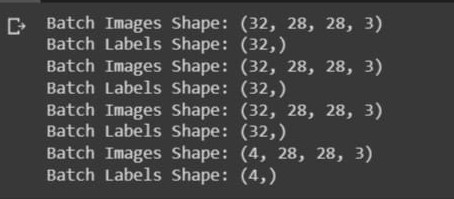

Output:

In this example, the code prints the shape of each batch of images and labels as it iterates over the dataset. Since the batch size is set to 32, each full batch includes 32 images and the 32 labels that go with them. The label data has the shape (batch_size,) and the image data has the shape (batch_size, image_height, image_width, num_channels), for example (32, 28, 28, 3). In other words, each image is 28 pixels high and 28 pixels wide and has three color channels. Because the dataset holds 100 samples, the final batch contains only the remaining 4 images and labels.
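As an alternative to zipping two datasets, the images and labels can be passed to from_tensor_slices() together as a tuple. The sketch below uses the same random stand-in data and skips shuffling so that the batch sizes are easy to inspect. It also shows the drop_remainder option of batch(), which discards a final batch that is smaller than batch_size.

```python
import numpy as np
import tensorflow as tf

# Random stand-in data with the same shapes as in the example above.
images = np.random.rand(100, 28, 28, 3)
labels = np.random.randint(0, 10, size=(100,))

# Passing a tuple yields (image, label) pairs without an explicit zip().
dataset = tf.data.Dataset.from_tensor_slices((images, labels)).batch(32)
batch_sizes = [int(batch_images.shape[0])
               for batch_images, _ in dataset.as_numpy_iterator()]
print(batch_sizes)  # [32, 32, 32, 4]

# drop_remainder=True keeps only full batches.
full_only = tf.data.Dataset.from_tensor_slices((images, labels)).batch(
    32, drop_remainder=True)
full_batch_sizes = [int(batch_images.shape[0])
                    for batch_images, _ in full_only.as_numpy_iterator()]
print(full_batch_sizes)  # [32, 32, 32]
```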

Concepts of TensorFlow:

1. Computational Graphs:

The notion of computational graphs is at the heart of TensorFlow. Rather than carrying out calculations directly, TensorFlow can build a graph model of the calculations to be performed. The nodes of the graph represent mathematical operations, and the edges represent the data (tensors) that flows between them. Thanks to the optimization and parallelization that this graph-based approach allows, TensorFlow can execute calculations efficiently on CPUs and GPUs.
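In TensorFlow 2.x, one way to obtain such a graph is to wrap a Python function with tf.function. The following is a minimal sketch; the `affine` function and its inputs are hypothetical examples, not part of any library.

```python
import tensorflow as tf

@tf.function
def affine(x, w, b):
    """Compute x @ w + b; tf.function traces this into a graph."""
    return tf.matmul(x, w) + b

x = tf.constant([[1.0, 2.0]])
w = tf.constant([[3.0], [4.0]])
b = tf.constant([0.5])

result = affine(x, w, b)

# Inspect the traced graph: its nodes are operations such as MatMul.
concrete = affine.get_concrete_function(x, w, b)
op_types = [op.type for op in concrete.graph.get_operations()]

print(result.numpy())  # [[11.5]]
print(op_types)
```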

2. Tensors:

Tensors are multi-dimensional arrays and are the inspiration for the name TensorFlow. Tensors, which can have different ranks (numbers of dimensions) and data types, are the essential building blocks of TensorFlow. Tensors of rank 0 are scalars, those of rank 1 are vectors, those of rank 2 are matrices, and so on. Tensors are used to represent all kinds of data: input data, model parameters, and the intermediate values of a computation.
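The ranks described above can be checked directly. This is a small sketch with made-up values.

```python
import tensorflow as tf

scalar = tf.constant(3.0)                    # rank 0
vector = tf.constant([1.0, 2.0, 3.0])        # rank 1
matrix = tf.constant([[1, 2], [3, 4]])       # rank 2
image_batch = tf.zeros((8, 28, 28, 3))       # rank 4: a batch of images

ranks = [t.shape.rank for t in (scalar, vector, matrix, image_batch)]
dtypes = [t.dtype for t in (scalar, vector, matrix, image_batch)]

print(ranks)  # [0, 1, 2, 4]
print(dtypes)
```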

3. Sessions and Eager Execution:

To obtain results in older versions of TensorFlow (1.x), you had to build a computational graph and then execute it within a session. TensorFlow 2.0 and later versions, however, enable eager execution by default. Eager execution evaluates operations immediately, without first building a computational graph. This makes TensorFlow behave like ordinary imperative code and feel more natural and Pythonic.
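A minimal sketch of eager execution, assuming TensorFlow 2.x with default settings: the operation runs as soon as it is called, and the result can be read back immediately.

```python
import tensorflow as tf

# In TensorFlow 2.x, eager execution is on by default.
eager_enabled = tf.executing_eagerly()

values = tf.constant([1.0, 2.0, 3.0])
total = tf.reduce_sum(values * 2.0)  # evaluated immediately, no session needed

total_value = float(total.numpy())
print(eager_enabled, total_value)  # True 12.0
```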

4. Keras Integration:

TensorFlow's core library includes Keras, a high-level neural network API. Keras provides a user-friendly interface for building complex neural networks with minimal boilerplate code. The tf.keras module makes model creation, training, and evaluation simple, which makes TensorFlow accessible to both novices and expert researchers.
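To connect Keras with the dataset built earlier, here is a hedged sketch that trains a tiny model on a Dataset created by from_tensor_slices(). The layer sizes and the random data are arbitrary assumptions chosen only to keep the example small.

```python
import numpy as np
import tensorflow as tf

# Random stand-in data: 64 images of shape 28x28x3 with labels in 0..9.
images = np.random.rand(64, 28, 28, 3).astype("float32")
labels = np.random.randint(0, 10, size=(64,))
dataset = tf.data.Dataset.from_tensor_slices((images, labels)).batch(16)

# A deliberately small model; the layer sizes are arbitrary.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 3)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10),
])
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

# Keras accepts a batched tf.data.Dataset of (inputs, targets) pairs directly.
history = model.fit(dataset, epochs=1, verbose=0)
predictions = model.predict(images[:5], verbose=0)
print(predictions.shape)  # (5, 10)
```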

5. Automatic Differentiation:

TensorFlow's capacity for automatic differentiation is one of its main advantages. During model training, the backpropagation technique is used to automatically calculate the gradients of the loss function with respect to the model's parameters. Optimizers then use these gradients to update the model's parameters and improve the model's performance.
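Automatic differentiation can be tried on a simple function with tf.GradientTape. In this sketch, y = x^2 + 2x is a made-up example whose derivative, 2x + 2, equals 8 at x = 3.

```python
import tensorflow as tf

x = tf.Variable(3.0)

# The tape records the operations applied to x.
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x

# dy/dx = 2x + 2, which is 8 at x = 3.
gradient = tape.gradient(y, x)
gradient_value = float(gradient.numpy())
print(gradient_value)  # 8.0
```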

6. Optimizers:

TensorFlow provides several optimization algorithms, including Stochastic Gradient Descent (SGD), Adam, RMSprop, and others, to update model parameters during training. Depending on your model and task, you can pick the optimizer that works best or design your own if necessary.
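The following sketch shows one way an optimizer can be used in a manual training loop. The toy problem (fitting y = 2x with a single Dense layer), the learning rate, and the number of steps are all assumptions chosen for illustration.

```python
import tensorflow as tf

# Hypothetical toy regression problem: learn y = 2x.
x = tf.constant([[1.0], [2.0], [3.0], [4.0]])
y = 2.0 * x

layer = tf.keras.layers.Dense(1)
optimizer = tf.keras.optimizers.SGD(learning_rate=0.01)

def mse_loss():
    """Mean squared error of the layer's predictions on the toy data."""
    return tf.reduce_mean(tf.square(layer(x) - y))

initial_loss = float(mse_loss().numpy())

for _ in range(50):
    with tf.GradientTape() as tape:
        loss = mse_loss()
    grads = tape.gradient(loss, layer.trainable_variables)
    # The optimizer applies its update rule to each (gradient, variable) pair.
    optimizer.apply_gradients(zip(grads, layer.trainable_variables))

final_loss = float(mse_loss().numpy())
print(initial_loss, final_loss)
```

Swapping in another optimizer, such as tf.keras.optimizers.Adam, only changes the line that constructs `optimizer`.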

7. Callbacks and TensorBoard:

For monitoring and visualizing model training, TensorFlow provides robust tools. Callbacks let you perform certain tasks during training, like saving model checkpoints or adjusting learning rates in response to particular conditions. TensorBoard is a web-based visualization tool that lets you monitor metrics, inspect the computational graph, and evaluate the performance of your models.
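A minimal sketch of callbacks in use is shown here, assuming a toy regression problem and a temporary directory for the TensorBoard logs. EarlyStopping and TensorBoard are standard Keras callbacks; the data, model, and `log_dir` location are made up for the example.

```python
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical toy regression data, used only for illustration.
x = np.random.rand(64, 4).astype("float32")
y = x.sum(axis=1, keepdims=True)
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(16)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="sgd", loss="mse")

log_dir = tempfile.mkdtemp()  # assumed throwaway location for TensorBoard logs
callbacks = [
    # Stop training if the loss has not improved for 2 consecutive epochs.
    tf.keras.callbacks.EarlyStopping(monitor="loss", patience=2),
    # Write training metrics that TensorBoard can display.
    tf.keras.callbacks.TensorBoard(log_dir=log_dir),
]

history = model.fit(dataset, epochs=5, callbacks=callbacks, verbose=0)
epochs_run = len(history.history["loss"])
print(epochs_run, log_dir)
```

The logs can then be viewed by pointing the tensorboard command-line tool at `log_dir` with its --logdir option.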

8. Deployment Options:

Mobile devices, web browsers, and edge devices are just a few of the places where TensorFlow models can be deployed. TensorFlow.js allows models to be executed directly in the browser, whereas TensorFlow Lite is intended for mobile and embedded devices.

9. Distributed Training:

With the help of TensorFlow, you can train big models on several GPUs or machines simultaneously. This distributed training capability is essential for scaling machine learning workloads to handle massive data and sophisticated models.
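As a hedged sketch of single-machine distributed training, tf.distribute.MirroredStrategy replicates a model across the GPUs it finds and falls back to a single replica when none are available. The toy data, model, and per-replica batch size of 8 are assumptions made for illustration.

```python
import numpy as np
import tensorflow as tf

# MirroredStrategy uses all visible GPUs, or one CPU replica if there are none.
strategy = tf.distribute.MirroredStrategy()
num_replicas = strategy.num_replicas_in_sync

# Hypothetical toy regression data.
x = np.random.rand(64, 4).astype("float32")
y = x.sum(axis=1, keepdims=True)

# Scale the global batch size with the number of replicas.
global_batch_size = 8 * num_replicas
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(global_batch_size)

# Variables created inside the scope are mirrored across replicas.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")

history = model.fit(dataset, epochs=1, verbose=0)
print(num_replicas, history.history["loss"])
```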

10. Community and Ecosystem:

A sizable and vibrant community supports the vast ecosystem of TensorFlow. Modern solutions are simpler to develop because of the pre-trained models, libraries, and tools that are built on top of TensorFlow.

In conclusion, TensorFlow is a flexible and robust deep learning library that offers a vast range of tools and capabilities for developing and refining machine learning models. Researchers and developers in the machine learning community often use it because of its efficient computational graph execution, automatic differentiation, support for heterogeneous hardware, and availability on many platforms. TensorFlow has the adaptability and scalability to take on numerous machine learning tasks, regardless of whether you are a novice or an experienced practitioner.

Applications of TensorFlow:

1. Computer Vision:

- Image Classification:

TensorFlow is frequently used for image classification tasks, which assign images to predetermined categories. Convolutional neural networks (CNNs) and other models are commonly used for this task, and TensorFlow offers tools for creating, training, and deploying such models effectively.

- Object Detection:

Object detection with TensorFlow involves localizing objects within an image using bounding boxes and classifying each of them. Modern object detection models like Single Shot MultiBox Detector (SSD) and You Only Look Once (YOLO) can be used for complex detection tasks with TensorFlow, and TensorFlow's Object Detection API makes such tasks simpler to complete.

- Semantic Segmentation:

TensorFlow can be used for semantic segmentation, which classifies every pixel in an image. Tasks like autonomous driving require models to understand their surroundings thoroughly, which makes semantic segmentation essential.

- Image Generation:

Using Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), TensorFlow can generate images. GANs in particular are popular for producing realistic images from random noise and have many creative uses.

2. Natural Language Processing (NLP):

- Text Classification:

TensorFlow can be used to build models that assign text to categories, for tasks such as topic classification or sentiment analysis (positive/negative).

- Named Entity Recognition (NER):

TensorFlow is helpful for named entity recognition (NER) tasks, where the objective is to recognize entities like names, locations, and dates in a given text.

- Machine Translation:

TensorFlow can be used to build sequence-to-sequence models for machine translation tasks, enabling translation between languages.

- Text Generation:

Recurrent neural networks (RNNs) and transformer models built with TensorFlow can generate text, which makes them suitable for projects like chatbots and language modeling.

3. Speech Recognition and Synthesis:

- Speech Recognition:

TensorFlow can be used for Automatic Speech Recognition (ASR) tasks, which aim to transform spoken language into written text.

- Text-to-Speech (TTS):

TensorFlow can be used to generate synthetic speech from text input for text-to-speech (TTS) applications like voice assistants and audiobook narration.

4. Recommender Systems:

- TensorFlow may be used to create recommender systems that provide consumers with tailored suggestions based on their previous behavior and preferences.

- Techniques like matrix factorization and collaborative filtering, which can be used in TensorFlow, are frequently used in recommender systems.

5. Healthcare:

- Applications of TensorFlow in the healthcare industry include the study of medical images, the diagnosis of diseases, and the development of new drugs.

- TensorFlow models may be used to identify anomalies in medical pictures like X-rays and MRIs to facilitate early diagnosis and treatment planning.

- TensorFlow can be used in the drug development process to forecast a prospective medication's effectiveness or to examine a potential medicine's molecular structure.

6. Autonomous Vehicles:

- TensorFlow can be an important component in building autonomous driving systems. It is used for tasks such as object detection, lane detection, and traffic sign recognition.

- Autonomous cars can sense their surroundings and quickly make safe driving judgments by combining TensorFlow models with sensors and cameras.

7. Finance:

- Algorithmic trading, risk analysis, and fraud detection are just a few of the financial modeling uses for TensorFlow.

- To evaluate financial data, spot probable fraud trends, and forecast stock values, TensorFlow models may be employed.

8. Robotics:

- Robot control, object manipulation, and path planning are all possible uses of TensorFlow in robotics.

- Thanks to reinforcement learning and TensorFlow, robots can learn from their actions and adjust them based on feedback from the environment.

9. Game Playing:

- It has proven possible to build agents that can play board games and video games using TensorFlow.

- Agents can learn the best game-playing methods thanks to deep neural networks and reinforcement learning algorithms.

These are just a handful of the numerous applications for which TensorFlow can be used. Researchers and developers from many fields turn to the library for its adaptability, scalability, and ease of use when tackling complex machine learning and deep learning challenges.

Advantages:

1. Flexibility and Versatility:

TensorFlow offers an adaptable and versatile framework for creating various machine learning models, including deep neural networks. Researchers and developers may test alternative designs and optimization strategies using its broad APIs and tools.

2. Effective Parallel Processing:

TensorFlow enables efficient parallel processing across CPUs and GPUs through the execution and optimization of its computational graph. As a result, it can be used to train big models on powerful hardware.

3. Automatic Differentiation:

By employing the backpropagation algorithm to produce gradients automatically, TensorFlow speeds up model training and makes it easier to incorporate complex optimization techniques like stochastic gradient descent (SGD).

4. Rich Ecosystem:

TensorFlow has strong industry backing, a sizable user community, and a thriving ecosystem. As a result, many tools, pre-trained models, and third-party libraries are available that can be quickly integrated into applications.

5. High-Level APIs:

TensorFlow includes high-level APIs, like Keras, to streamline model creation and reduce boilerplate code. To design and train models, Keras offers a simple, straightforward interface.

6. Distributed Computing:

TensorFlow's support for distributed computing enables users to train models over several GPUs or computers. For deep learning activities to be scaled up to handle massive datasets and intricate models, this is crucial.

7. TensorBoard Visualization:

TensorBoard, TensorFlow's visualization tool, offers real-time and interactive visualizations of model performance, computational graphs, and training metrics. This enables effective model analysis and debugging.

Disadvantages:

1. Steep Learning Curve:

TensorFlow can have a steep learning curve, particularly for those new to machine learning and deep learning. Understanding ideas like computational graphs, graph optimizations, and sessions (in previous versions) can take some effort.

2. Verbose Code:

Writing complicated models in TensorFlow can produce verbose code, especially with low-level APIs. This verbosity can make the code harder to understand and maintain.

3. Hardware Requirements:

Training deep learning models with TensorFlow frequently requires substantial processing and memory resources, which might be a barrier for people or organizations with limited budgets.

4. Debugging Complexity:

Debugging TensorFlow code can be difficult, especially when working with big models and intricate architectures. Errors might occur due to problems with the data pipeline, model setup, or graph construction.

5. Version Compatibility:

TensorFlow periodically makes changes between versions that may cause problems with older code or previously trained models. Code migration to newer versions may call for modifications.

6. Resource Intensive:

TensorFlow may use many resources, including memory and computing power, which may prevent it from being used on devices with limited resources, such as smartphones and edge devices.

7. The Complexity of Customization:

Although TensorFlow offers flexibility, some parts of the framework can be hard to customize and less user-friendly, especially when using low-level APIs.

Ultimately, TensorFlow is a powerful and popular deep learning framework with various benefits, including flexibility, efficiency, and a large ecosystem. However, it also has several drawbacks, including a steep learning curve and potentially heavy resource requirements. Judging whether TensorFlow is the best option for a particular machine learning project requires careful analysis of the specific use case and project needs.