Structured vs Unstructured Ward in Hierarchical Clustering using Scikit Learn

Introduction

A well-liked and frequently used technique for classifying and clustering data is hierarchical clustering. In hierarchical clustering, data points are categorized according to their similarity, with similar data points placed in the same cluster and dissimilar data points kept in distinct clusters.

How to assess the degree of similarity between data points and how to combine clusters is one of the crucial decisions in hierarchical clustering. The two most popular techniques for this in Scikit-Learn are unstructured Ward and structured Ward.

Structured Ward Clustering

- A ward linkage criteria is used by the hierarchical clustering technique known as "structured ward" to gauge how related the data points are to one another.

- This criterion evaluates the sum of squared distances between every pair of data points in the two combined clusters.

- This approach seeks to produce compact, well-separated clusters by minimizing the overall within-cluster variance.

Unstructured Ward Clustering

- Unstructured Ward is a hierarchical clustering technique that employs a related ward linkage criterion but uses a different distance metric.

- Unstructured Ward uses a Euclidean distance metric to gauge how comparable two data points are rather than calculating the sum of squared distances.

- More flexible clusters are produced, enabling more intricate shapes and structures.

Conditional Use Cases

- Overall, wards are a helpful technique in scikit-learn for hierarchical clustering, whether they are structured or unstructured.

- The qualities of the data and the required cluster features determine which of the two methods should be used.

- A structured Ward is often more appropriate for data with compact and well-separated clusters.

- Data with more intricate and flexible cluster structures are more suitable for unstructured Ward.

- Let's look at it in the example below.

- The silhouette score will show which algorithms perform better on challenging datasets.

A Swiss roll dataset is created, and hierarchical clustering is applied to their location.

The clustering is only allowed in the k-Nearest Neighbours network in the second stage, which is a hierarchical clustering with a prior structure. In the first step, hierarchical clustering is carried out without connectivity limitations on the structure and is only based on distance.

Some of the clusters that were learned without connection restrictions did not adhere to the Swiss roll's structure and instead extended across various folds of the manifolds. On the other hand, the clusters create a lovely parcellation of the Swiss roll when opposing connection limitations.

To utilize hierarchical clustering with both structured and unstructured Ward in Scikit-learn, consider the following example:

Structured Ward Clustering Python code is as follows:

from sklearn.datasets import make_blobs

from sklearn.cluster import AgglomerativeClustering

from sklearn.metrics import silhouette_score

X, y = make_blobs(n_samples=10000, n_features=8, centers=5)

# Create a structured Ward hierarchical clustering object

structured_ward = AgglomerativeClustering(n_clusters=5, linkage='ward')

structured_ward.fit(X)

print("Structured Ward labels:", structured_ward.labels_)

print(silhouette_score(X, structured_ward.labels_))

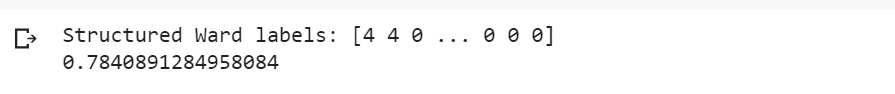

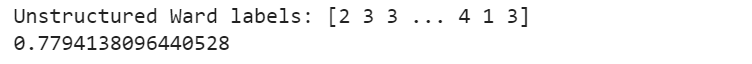

Output

Unstructured Ward Clustering Python code is as follows:

from sklearn.datasets import make_blobs

from sklearn.cluster import AgglomerativeClustering

X, y = make_blobs(n_samples=10000,

n_features=8, centers=5)

# Create an unstructured Ward hierarchical clustering object

unstructured_ward = AgglomerativeClustering(n_clusters=5, linkage='ward', affinity='euclidean')

unstructured_ward.fit(X)

print("Unstructured Ward labels:", unstructured_ward.labels_)

print(silhouette_score(X, unstructured_ward.labels_))

Output

- The AgglomerativeClustering class is used in this code to do hierarchical clustering with both structured and unstructured Ward after some test data is generated using the make_blobs function.

- The structured_ward object uses the sum of squared distances with the default ward linkage condition.

- The unstructured_ward object employs the Euclidean distance metric.

- The labels for every data point for each clustering algorithm are then printed.

- In the case mentioned above, a complex dataset is used, and an unstructured ward algorithm outperforms a structured ward algorithm by a significant margin.

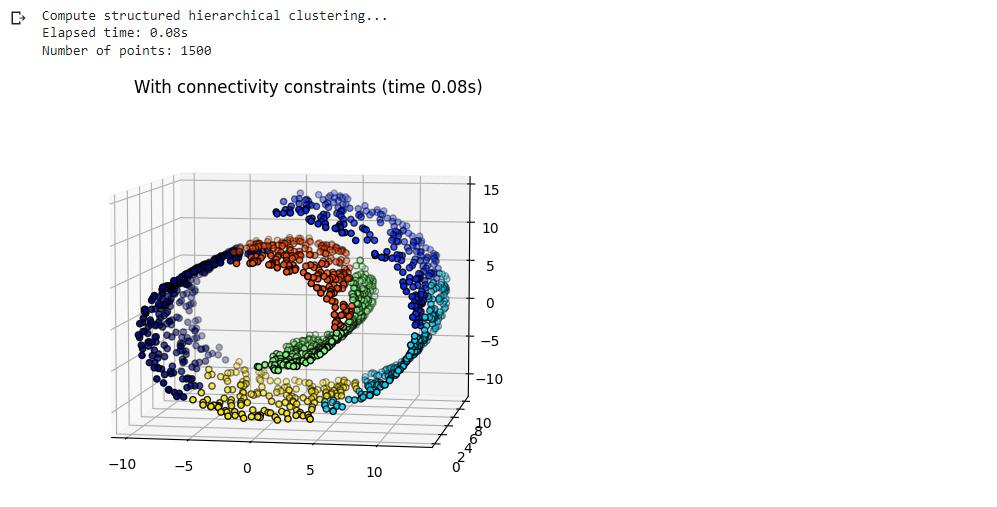

Example Code for Structured Hierarchical Cluster

import time as time

import mpl_toolkits.mplot3d # noqa: F401

import numpy as np

from sklearn.neighbors import kneighbors_graph

connectivity = kneighbors_graph(X, n_neighbors=10, include_self=False)

print("Compute structured hierarchical clustering...")

st = time.time()

ward = AgglomerativeClustering(

n_clusters=6, connectivity=connectivity, linkage="ward"

).fit(X)

elapsed_time = time.time() - st

label = ward.labels_

print(f"Elapsed time: {elapsed_time:.2f}s")

print(f"Number of points: {label.size}")

# Plotting the structured hierarchical clusters.

fig2 = plt.figure()

ax2 = fig2.add_subplot(121, projection="3d", elev=7, azim=-80)

ax2.set_position([0, 0, 0.95, 1])

for l in np.unique(label):

ax2.scatter(

X[label == l, 0],

X[label == l, 1],

X[label == l, 2],

color=plt.cm.jet(float(l) / np.max(label + 1)),

s=20,

edgecolor="k",

)

fig2.suptitle(f"With connectivity constraints (time {elapsed_time:.2f}s)")

plt.show()

Output

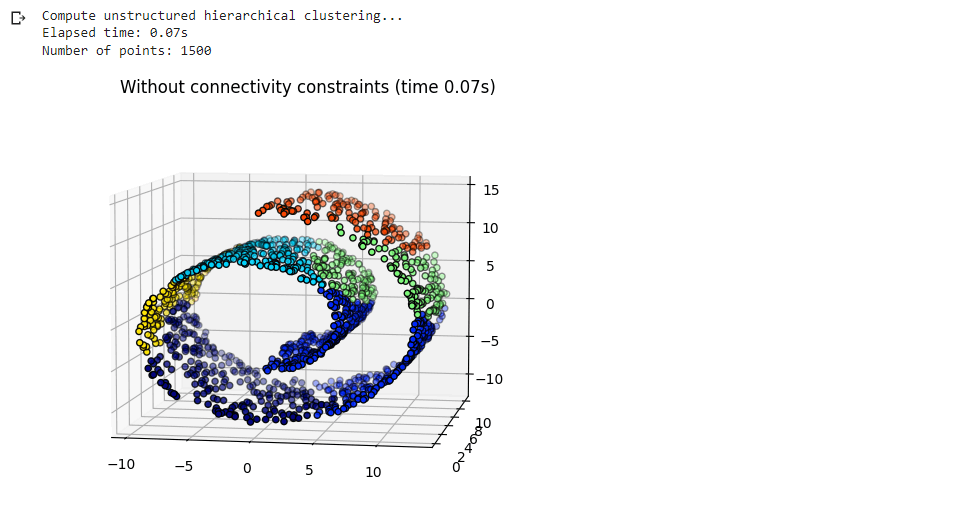

Example Code for Unstructured Hierarchical Cluster

import time as time

import mpl_toolkits.mplot3d # noqa: F401

import numpy as np

from sklearn.datasets import make_swiss_roll

n_samples = 1500

noise = 0.05

X, _ = make_swiss_roll(n_samples, noise=noise)

# Make it thinner

X[:, 1] *= 0.5

from sklearn.cluster import AgglomerativeClustering

print("Compute unstructured hierarchical clustering...")

st = time.time()

ward = AgglomerativeClustering(n_clusters=6, linkage="ward").fit(X)

elapsed_time = time.time() - st

label = ward.labels_

print(f"Elapsed time: {elapsed_time:.2f}s")

print(f"Number of points: {label.size}")

# Plotting the unstructured hierarchical clusters.

import matplotlib.pyplot as plt

fig1 = plt.figure()

ax1 = fig1.add_subplot(111, projection="3d", elev=7, azim=-80)

ax1.set_position([0, 0, 0.95, 1])

for l in np.unique(label):

ax1.scatter(

X[label == l, 0],

X[label == l, 1],

X[label == l, 2],

color=plt.cm.jet(float(l) / np.max(label + 1)),

s=20,

edgecolor="k",

)

_ = fig1.suptitle(f"Without connectivity constraints (time {elapsed_time:.2f}s)")

Output