How can TensorFlow be used to download and explore the Iliad dataset using Python?

The Iliad dataset can be downloaded and explored from a TensorFlow-based Python environment in several ways, and this tutorial walks through one of them step by step. The dataset contains the text of "The Iliad," the ancient Greek epic poem attributed to Homer.

Introduction to the Iliad Dataset:

The Iliad dataset contains the epic poem "The Iliad," traditionally attributed to the poet Homer. It is a useful resource for researchers, historians, linguists, and literature enthusiasts who want to study one of the most celebrated works in Western literature.

Overview of "The Iliad":

- "The Iliad" is a Greek epic poem thought to have been composed around the eighth century BCE. It tells the story of the Trojan War, the legendary conflict between the Greeks (Achaeans) and the Trojans.

- The poem is written in dactylic hexameter, the meter characteristic of ancient Greek epic poetry. It is divided into 24 books (sometimes called chapters) and runs to about 15,693 lines in total.

Availability and Retrieval of the Iliad Dataset:

- The Iliad dataset is often accessible through digital repositories and platforms dedicated to public-domain literary works, such as Project Gutenberg. Researchers and developers can download the text of "The Iliad" in various formats, including plain text, HTML, or PDF.

- To retrieve the text in Python, one can download it from the Project Gutenberg website. Libraries such as Requests and BeautifulSoup can be employed to fetch and parse the content.

Step-by-step process:

Step 1: Installing TensorFlow and Other Required Libraries

- Make sure Python is installed on your machine before continuing. It is available from https://www.python.org/downloads/.

- After installing Python, open a terminal or command prompt and install the required libraries with pip:

    pip install tensorflow
    pip install requests
    pip install beautifulsoup4
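To confirm the installation worked before moving on, a quick check along the following lines can help. This is a minimal sketch: `find_missing` is a hypothetical helper name, and the check only tests whether each module can be located, not whether it imports cleanly.

```python
from importlib.util import find_spec


def find_missing(modules):
    """Return the names in `modules` that cannot be found in this environment."""
    return [name for name in modules if find_spec(name) is None]


# The import name differs from the pip package name for beautifulsoup4 (bs4).
required = ["tensorflow", "requests", "bs4"]
missing = find_missing(required)
if missing:
    print("Missing libraries:", ", ".join(missing))
else:
    print("All required libraries are installed.")
```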

Step 2: Import Required Libraries

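This step imports the libraries used in the tutorial. requests fetches the text over HTTP, and BeautifulSoup is available for parsing HTML pages, although the plain-text file used in Step 3 needs no parsing. TensorFlow supports the deep learning work outlined in Step 6; the download function in Step 3 relies on requests.

    import tensorflow as tf
    import requests
    from bs4 import BeautifulSoup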

Step 3: Web Scraping to Get the Iliad Text

We will obtain the text of "The Iliad" from Project Gutenberg, a dependable source of public-domain literary works. The function below requests the URL of Project Gutenberg's plain-text edition and returns the text if the request succeeds.

    def download_iliad():
        # Plain-text edition of the Iliad hosted by Project Gutenberg.
        url = "http://www.gutenberg.org/cache/epub/6130/pg6130.txt"
        # A timeout keeps the call from hanging on an unresponsive server.
        response = requests.get(url, timeout=30)
        if response.status_code == 200:
            return response.text
        print("Failed to download the Iliad.")
        return None

    iliad_text = download_iliad()
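If you would rather route the download through TensorFlow itself, one option is Keras' `tf.keras.utils.get_file`, which downloads a URL into a local cache and returns the path of the cached file. The sketch below is an assumption about how that could look here, not part of the original walkthrough: `download_with_tensorflow` and the cache file name are hypothetical, and whether Project Gutenberg accepts this kind of automated request is not something the sketch guarantees.

```python
from pathlib import Path


def download_with_tensorflow(url, fname="iliad_pg6130.txt"):
    """Download `url` through Keras' cached downloader and return its text."""
    # Imported lazily because importing TensorFlow is slow and nothing else
    # in this sketch depends on it.
    import tensorflow as tf

    # get_file stores the file in the Keras cache directory and reuses the
    # cached copy on later calls instead of downloading it again.
    local_path = tf.keras.utils.get_file(fname=fname, origin=url)
    # "utf-8-sig" also strips a byte-order mark if the file starts with one.
    return Path(local_path).read_text(encoding="utf-8-sig")


# Example call (requires network access and TensorFlow):
# iliad_text = download_with_tensorflow(
#     "http://www.gutenberg.org/cache/epub/6130/pg6130.txt"
# )
```

The returned string can be passed to the preprocessing step in the same way as the text fetched with requests.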

Step 4: Preprocessing the Iliad Text

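Before exploring the text, we need to preprocess it. The function below removes the Project Gutenberg header and footer, collapses line breaks and extra whitespace, and splits the text at each "BOOK" heading. The marker strings are tied to this particular file, so they may need adjusting for other editions.

    def preprocess_text(text):
        # Drop the header: keep everything from the first "BOOK I" onward.
        start_index = text.find("BOOK I")
        if start_index != -1:
            text = text[start_index:]
        # Drop the footer. find() returns -1 when the marker is absent, and
        # slicing with -1 would silently cut off the last character.
        end_index = text.find("End of Project Gutenberg's")
        if end_index != -1:
            text = text[:end_index]
        # Collapse line breaks and repeated whitespace into single spaces.
        text = " ".join(text.split())
        # Split on the "BOOK " headings (BOOK I, BOOK II, ...), dropping empty pieces.
        paragraphs = [p.strip() for p in text.split("BOOK ") if p.strip()]
        return paragraphs

    iliad_paragraphs = preprocess_text(iliad_text)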
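Splitting on the bare string "BOOK " discards the information about which book each piece belongs to. The following sketch shows one possible refinement on a tiny made-up sample: it splits on headings of the form "BOOK" plus a Roman numeral and keeps the numeral as a dictionary key. The pattern `BOOK_HEADING` and the function `split_into_books` are hypothetical names, and the heading format in the real file is an assumption you should verify.

```python
import re

# Assumed heading format: "BOOK" followed by a Roman numeral and an optional period.
BOOK_HEADING = re.compile(r"\bBOOK ([IVXLC]+)\b\.?")


def split_into_books(text):
    """Map each Roman numeral to the text that follows its heading."""
    # With one capturing group, split() returns
    # [preamble, numeral_1, body_1, numeral_2, body_2, ...].
    parts = BOOK_HEADING.split(text)
    books = {}
    for numeral, body in zip(parts[1::2], parts[2::2]):
        body = " ".join(body.split())  # collapse line breaks and extra spaces
        # Keep the longest body per numeral so that a short table-of-contents
        # entry does not overwrite the book itself.
        if len(body) > len(books.get(numeral, "")):
            books[numeral] = body
    return books


sample = "Preface text. BOOK I. Sing, goddess, the wrath.\r\nBOOK II. Now the gods slept."
for numeral, body in split_into_books(sample).items():
    print(numeral, "->", body)
```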

Step 5: Exploring the Iliad Dataset

With the preprocessed text in hand, we can begin examining the dataset. The code below counts the books, totals the characters, finds the longest and shortest books, and prints the opening of the first one.

    num_paragraphs = len(iliad_paragraphs)
    total_characters = sum(len(paragraph) for paragraph in iliad_paragraphs)
    longest_paragraph = max(iliad_paragraphs, key=len)
    shortest_paragraph = min(iliad_paragraphs, key=len)

    print("First 500 characters of the Iliad:\n", iliad_paragraphs[0][:500])
    print("Number of paragraphs (books):", num_paragraphs)
    print("Total characters in the Iliad:", total_characters)
    print("Longest paragraph:\n", longest_paragraph)
    print("Shortest paragraph:\n", shortest_paragraph)
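Character totals alone say little about how the books compare. As a small extension, the sketch below computes a word and character count for each book and prints one line per book. `summarize_books` is a hypothetical helper, and the two sample strings stand in for the real `iliad_paragraphs` list.

```python
def summarize_books(books):
    """Return one dict per book with its position, character count, and word count."""
    rows = []
    for index, book in enumerate(books, start=1):
        rows.append({
            "book": index,
            "characters": len(book),
            "words": len(book.split()),  # whitespace-separated tokens
        })
    return rows


# Stand-in data; in the tutorial this would be iliad_paragraphs.
sample_books = ["I. Sing, goddess, the wrath of Achilles", "II. Now the gods slept"]
for row in summarize_books(sample_books):
    print(f"Book {row['book']:>2}: {row['words']:>6} words, {row['characters']:>7} characters")
```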

Step 6: Additional Exploration or Analysis

The Iliad dataset leaves plenty of room for further investigation. You could compute word frequency distributions, run sentiment analysis, or use TensorFlow's deep learning capabilities to train a text generation model.

The dataset is a useful starting point for academics, historians, linguists, and literary enthusiasts who want to study one of the most renowned works of Western literature in more depth.

Keep in mind that this tutorial covers only the fundamentals of downloading and exploring the Iliad dataset. You can adapt the analysis and modeling to your own requirements.
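As a possible first step toward the text generation idea, the sketch below prepares training data for a character-level model: it maps each character to an integer id and cuts the text into input and target windows, where the target is the input shifted by one character. The function names and the window length are assumptions for illustration. The resulting lists of ids could then be handed to TensorFlow, for example through `tf.data.Dataset.from_tensor_slices`.

```python
def build_char_vocab(text):
    """Assign an integer id to every distinct character, in sorted order."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}


def make_training_pairs(text, vocab, seq_length):
    """Return (input_ids, target_ids) pairs; each target is its input shifted by one."""
    ids = [vocab[ch] for ch in text]
    pairs = []
    for start in range(len(ids) - seq_length):
        window = ids[start:start + seq_length + 1]
        pairs.append((window[:-1], window[1:]))
    return pairs


sample_text = "wrath"
vocab = build_char_vocab(sample_text)
pairs = make_training_pairs(sample_text, vocab, seq_length=3)
print(len(vocab), "distinct characters,", len(pairs), "training pairs")
```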

Complete code of the above process:

import tensorflow as tf

import requests

from bs4 import BeautifulSoup

import re

from collections import Counter

def download_iliad():

url = "http://www.gutenberg.org/cache/epub/6130/pg6130.txt"

response = requests.get(url)

if response.status_code == 200:

return response.text

else:

print("Failed to download the Iliad.")

return None

def preprocess_text(text):

# Remove the header information (Project Gutenberg metadata).

start_index = text.find("BOOK I")

text = text[start_index:]

# Remove Project Gutenberg's footer information.

end_index = text.find("End of Project Gutenberg's")

text = text[:end_index]

# Replace newline characters and extra spaces.

text = text.replace("\r\n", " ").replace(" ", " ").strip()

# Split the text into paragraphs (BOOK I, BOOK II, etc.).

paragraphs = text.split("BOOK ")

# Remove any empty strings from the list.

paragraphs = [p.strip() for p in paragraphs if p.strip()]

return paragraphs

def explore_dataset(paragraphs):

# Calculate the total number of paragraphs (books).

num_paragraphs = len(paragraphs)

# Calculate the total number of characters in the entire Iliad.

total_characters = sum(len(paragraph) for paragraph in paragraphs)

# Find the longest and shortest paragraphs.

longest_paragraph = max(paragraphs, key=len)

shortest_paragraph = min(paragraphs, key=len)

return num_paragraphs, total_characters, longest_paragraph, shortest_paragraph

def nlp_word_frequency(paragraphs):

# Combine all paragraphs into a single text.

combined_text = " ".join(paragraphs)

# Remove punctuation and convert text to lowercase.

clean_text = re.sub(r'[^\w\s]', '', combined_text).lower()

# Tokenize the text into words.

words = clean_text.split()

# Count the frequency of each word using Counter.

word_freq = Counter(words)

return word_freq

if __name__ == "__main__":

# Step 1: Download the Iliad dataset

iliad_text = download_iliad()

if not iliad_text:

exit()

# Step 2: Preprocess the Iliad text

iliad_paragraphs = preprocess_text(iliad_text)

# Step 3: Explore the Iliad dataset

num_paragraphs, total_characters, longest_paragraph, shortest_paragraph = explore_dataset(iliad_paragraphs)

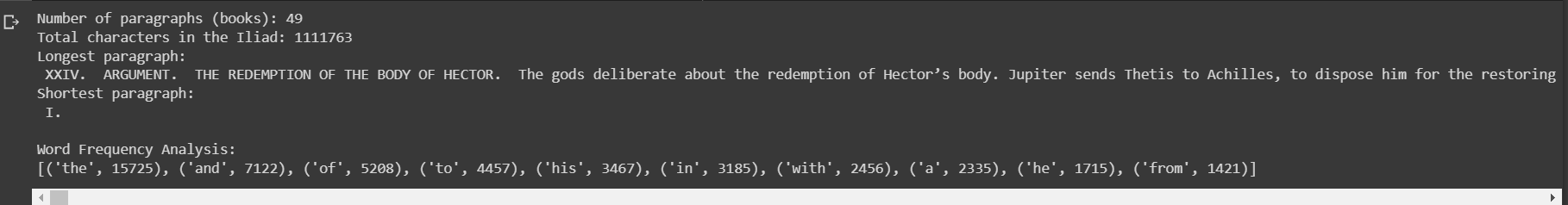

print("Number of paragraphs (books):", num_paragraphs)

print("Total characters in the Iliad:", total_characters)

print("Longest paragraph:\n", longest_paragraph)

print("Shortest paragraph:\n", shortest_paragraph)

# Step 4: Perform NLP task - Word Frequency Analysis

word_freq = nlp_word_frequency(iliad_paragraphs)

print("\nWord Frequency Analysis:")

# Print the top 10 most common words and their frequencies

print(word_freq.most_common(10))

Output:

The provided code downloads the Iliad dataset from Project Gutenberg, preprocesses the text, explores its components (number of paragraphs, total characters, longest and shortest paragraphs), and performs a simple word frequency analysis. You can further expand the code to explore more aspects of the dataset, such as sentiment analysis, part-of-speech tagging, or building a language model using TensorFlow.
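In English text, the most frequent words are usually function words such as "the" and "and", which reveal little about the content. A common refinement is to filter out such stop words before counting. The sketch below assumes an English-language text and uses a deliberately small, hand-picked stop-word list; both `STOP_WORDS` and `content_word_frequency` are hypothetical names, and a fuller list would be needed for real analysis.

```python
import re
from collections import Counter

# A small illustrative stop-word list, not a complete one.
STOP_WORDS = frozenset({
    "the", "and", "of", "to", "a", "in", "his", "with", "he", "that",
    "for", "on", "from", "by", "as", "is", "was", "their", "all", "but",
})


def content_word_frequency(paragraphs, stop_words=STOP_WORDS):
    """Count lowercase words across all paragraphs, skipping stop words."""
    text = " ".join(paragraphs).lower()
    # Words are runs of letters, optionally with one internal apostrophe.
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text)
    return Counter(word for word in words if word not in stop_words)


sample_paragraphs = ["The wrath of Achilles, the wrath!", "Achilles and the gods"]
freq = content_word_frequency(sample_paragraphs)
print(freq.most_common(3))
```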

Applications:

1. Literary Analysis and Research:

Academics and general readers can use the Iliad dataset to analyze the poem in depth. To gain a deeper understanding of the work, they can examine its themes, character development, and narrative structure, and compare different translations or editions.

2. Natural Language Processing (NLP) Research:

Researchers working on natural language processing problems can benefit from the Iliad dataset. NLP models can be trained on the text for tasks such as sentiment analysis, language modeling, named entity recognition, and part-of-speech tagging.

3. Language Modeling and Text Generation:

Using deep learning frameworks like TensorFlow, researchers can build language models that learn from the Iliad dataset to generate new text that imitates the style and language of the ancient epic. This can lead to the creation of automated storytelling systems or interactive chatbots with a historical flair.

4. Educational and Interactive Applications:

The Iliad dataset can be used to create educational materials, interactive apps, and games that immerse users in the ancient Greek world. These applications can help students and the general public engage with classical literature in a modern and interactive way.

5. Historical and Cultural Studies:

The Iliad is a significant historical and cultural monument and a literary masterpiece. Academics and historians can study the work to learn more about ancient Greek society, warfare, mythology, and cultural values.

6. Sentiment Analysis of Literary Works:

By examining the sentiment expressed in different sections of the Iliad, academics can explore the emotional undercurrents of the epic's characters and events. This may offer fresh insight into how the emotional tone shifts over the course of the narrative.
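To make the sentiment analysis idea concrete, here is a deliberately simple lexicon-based sketch. It scores a passage by counting words from two tiny hand-picked word lists; the lists, the function name `sentiment_score`, and the scoring formula are all assumptions for illustration. Serious work would use a validated lexicon or a trained model.

```python
import re

# Tiny illustrative lexicons, chosen by hand for this example only.
POSITIVE_WORDS = frozenset({"glory", "joy", "honour", "love", "peace", "victory"})
NEGATIVE_WORDS = frozenset({"wrath", "grief", "woe", "death", "rage", "sorrow"})


def sentiment_score(text):
    """Return (positive - negative) word count divided by total words, or 0.0 if empty."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    positive = sum(word in POSITIVE_WORDS for word in words)
    negative = sum(word in NEGATIVE_WORDS for word in words)
    return (positive - negative) / len(words)


for passage in ["Glory and joy to the victor", "Wrath and grief and woe"]:
    print(f"{sentiment_score(passage):+.2f}  {passage}")
```

Applying such a function to each book in turn would give a rough picture of how the tone shifts across the poem.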

Working with the Iliad dataset in Python offers a practical route into ancient Greek literature. Using Python's data manipulation, NLP, and deep learning libraries, researchers and practitioners can generate new text, gain new insights, and bring this age-old epic closer to contemporary audiences. The combination of historical importance and modern analytical tools makes the Iliad dataset a rewarding subject for investigation and study.